Key Takeaways

- Master essential Generative ChatGPT AI skills: Discover the top 5 skills you need to excel in Generative AI, including Python programming, Docker/Kubernetes and Cloud Computing, Encoder-Decoder Architecture, the Attention Mechanism, and Transformer models such as BERT.

- Unlock innovation with transformer models: Transformer models like BERT revolutionize Generative AI by capturing long-range dependencies and improving language understanding, enabling you to generate contextually relevant and high-quality outputs.

- Stay ahead in the rapidly evolving AI landscape: By acquiring these key skills, you position yourself at the forefront of AI innovation, empowering you to design intelligent systems that generate meaningful and coherent outputs. Stay updated and embrace the latest developments to excel in Generative ChatGPT AI.

Welcome to our comprehensive guide on the top 5 Generative ChatGPT AI skills you need to know.

In today’s digital age, artificial intelligence (AI) has revolutionized the way we interact and communicate. Generative ChatGPT AI, in particular, has gained immense popularity due to its ability to generate human-like text and engage in meaningful conversations.

As businesses and individuals increasingly recognize the potential of Generative ChatGPT AI, it becomes crucial to understand the skills necessary to harness its power effectively.

In this blog, we will explore the top 5 skills that can help you master Generative ChatGPT AI and unlock its full potential.

Mastering the top 5 Generative ChatGPT AI skills discussed in this blog will empower you to harness the full potential of AI-powered conversations.

From Python programming to transformer models, each skill plays a crucial role in building, deploying, and improving Generative AI systems.

So, let’s dive in and explore these skills in detail, equipping ourselves with the knowledge necessary to thrive in the ever-evolving landscape of Generative ChatGPT AI.

Before we venture further into this article, we would like to share who we are and what we do.

About 9cv9

9cv9 is a business tech startup based in Singapore and Asia, with a strong presence all over the world.

With over six years of startup and business experience, and being highly involved in connecting with thousands of companies and startups, the 9cv9 team has listed some important learning points in this overview of the guide on the top 5 Generative AI skillsets you should know.

If your company needs recruitment and headhunting services to hire top-quality Generative AI employees, you can use 9cv9 headhunting and recruitment services to hire top talents and candidates. Find out more here, or send over an email to [email protected].

Or just post 1 free job posting here at 9cv9 Hiring Portal in under 10 minutes.

Top 5 Generative ChatGPT AI Skills You Need to Know

- Python

- Docker/Kubernetes and Cloud Computing

- Encoder-Decoder Architecture

- Attention Mechanism

- Transformer Models and BERT Model

1. Python as a Skillset for Generative AI

Python has emerged as a powerhouse programming language in the field of artificial intelligence, including Generative AI.

Its simplicity, versatility, and extensive libraries make it an ideal choice for developing and implementing AI models.

In this section, we will explore why Python is a vital skillset for Generative AI and how it empowers developers to create innovative and cutting-edge AI applications.

- Python’s Rich Ecosystem of Libraries: Python boasts a vast ecosystem of libraries specifically designed for AI and machine learning. Libraries such as TensorFlow, PyTorch, and Keras provide powerful tools for building and training Generative AI models. These libraries offer pre-built functions, algorithms, and neural network architectures that simplify the development process and accelerate experimentation.

For example, TensorFlow Probability, a library built on top of TensorFlow, provides probability distributions and probabilistic layers that simplify building generative models such as Variational Autoencoders (VAEs), while TensorFlow itself is widely used to implement Generative Adversarial Networks (GANs).

By leveraging these libraries, developers can focus on the creative aspects of Generative AI without getting bogged down by low-level implementation details.

- Ease of Use and Readability: Python is known for its clean and readable syntax, which makes it easier to understand and maintain code. Its simplicity allows developers to quickly prototype ideas and experiment with different approaches. This agility is particularly valuable in the field of Generative AI, where iterating and fine-tuning models is a common practice.

Moreover, Python’s extensive documentation and active community support contribute to its ease of use. Developers can find a wealth of resources, tutorials, and code examples online, making it easier to learn and apply Python to Generative AI projects.

- NumPy and Pandas for Data Manipulation: Generative AI heavily relies on data manipulation and preprocessing. Python, combined with libraries like NumPy and Pandas, provides powerful tools for handling large datasets efficiently. NumPy, a fundamental library for scientific computing, offers high-performance multidimensional array objects and functions for numerical operations. Pandas, on the other hand, provides data structures and data analysis tools, enabling seamless data manipulation and preprocessing tasks.

For instance, developers can use NumPy arrays to represent and process image or text data, which are common input formats for Generative AI models.

Pandas, with its DataFrame structure, allows developers to handle complex datasets, perform data cleaning, and extract relevant features.

These capabilities enable developers to prepare and preprocess data effectively for training Generative AI models.
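To make the preprocessing idea concrete, here is a minimal sketch of how text and image data might be turned into NumPy arrays. The two sample sentences, the character-level vocabulary, and the synthetic 4x4 image are all assumptions made up for illustration; a real project would load its own data and usually use a proper tokenizer.

```python
import numpy as np

# Hypothetical toy corpus; a real project would load text from files.
sentences = ["hello world", "generative ai"]

# Build a character vocabulary; index 0 is reserved for padding.
chars = sorted(set("".join(sentences)))
char_to_id = {ch: i + 1 for i, ch in enumerate(chars)}

# Encode each sentence as integer ids and pad to a common length.
max_len = max(len(s) for s in sentences)
batch = np.zeros((len(sentences), max_len), dtype=np.int64)
for row, sentence in enumerate(sentences):
    ids = [char_to_id[ch] for ch in sentence]
    batch[row, : len(ids)] = ids

# A synthetic 8-bit grayscale "image" scaled to the [0, 1] range.
image = np.arange(16, dtype=np.uint8).reshape(4, 4) * 16
image_scaled = image.astype(np.float32) / 255.0

print(batch.shape, image_scaled.dtype)
```

Padding every sequence to the same length and scaling pixel values to a small range are common preparation steps, because most models expect rectangular, normalized inputs.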

- Integration with Deep Learning Frameworks: Python’s compatibility with popular deep learning frameworks, such as TensorFlow and PyTorch, is a significant advantage for Generative AI. These frameworks seamlessly integrate with Python, allowing developers to leverage the full power of deep learning techniques in their generative models.

For example, PyTorch, a widely used deep learning framework, builds its computation graph dynamically and computes gradients automatically through its autograd engine, making it easier to define and train complex generative models.

Developers can implement state-of-the-art architectures like Variational Autoencoders (VAEs) or Transformer models using PyTorch, and then utilize Python’s flexibility to customize and fine-tune these models as per their requirements.

- Visualization and Experimentation: Python’s visualization libraries, such as Matplotlib and Seaborn, enable developers to gain insights and visualize the output of Generative AI models. Visualization plays a vital role in understanding model behavior, identifying patterns, and evaluating model performance.

With Matplotlib, developers can create various types of plots, charts, and visual representations of generated data or model outputs.

Seaborn, on the other hand, provides enhanced statistical visualization capabilities, allowing developers to create informative visualizations to analyze the generative models’ performance and distribution.

Python is an indispensable skillset for Generative AI due to its rich ecosystem of libraries, ease of use, readability, powerful data manipulation capabilities, integration with deep learning frameworks, and visualization tools.

By mastering Python and its associated libraries, developers can unlock their creativity and build advanced Generative AI models that push the boundaries of innovation.

So, whether you’re a seasoned AI developer or just starting your journey in Generative AI, investing time in learning Python will undoubtedly prove to be a valuable asset in your pursuit of creating state-of-the-art AI applications.

2. Docker/Kubernetes and Cloud Computing as Skillsets for Generative AI

In the realm of Generative AI, where the deployment and management of complex AI models are crucial, Docker and Kubernetes have emerged as indispensable skill sets.

Docker provides containerization, while Kubernetes offers orchestration for deploying and scaling AI models efficiently.

In this section, we will explore why Docker and Kubernetes are vital for Generative AI and how they enable seamless deployment and management of AI applications.

- Docker for Containerization: Docker has revolutionized the way applications are packaged and deployed by introducing containerization. Containers provide a lightweight and portable environment that encapsulates all the dependencies, libraries, and configurations required for an application to run consistently across different environments. This is particularly valuable in Generative AI, where complex models, dependencies, and GPU acceleration may be involved.

By containerizing Generative AI models using Docker, developers can ensure consistency in model behavior and simplify the deployment process.

Containers eliminate compatibility issues between different operating systems and infrastructure setups, making it easier to deploy models on various platforms.

This allows developers to focus more on the AI model itself rather than worrying about compatibility concerns.

For example, a developer working on a Generative AI model for image synthesis can package the model, along with its dependencies and pre-trained weights, into a Docker container.

This container can then be easily shared with others or deployed on different machines or cloud platforms, ensuring consistent results and reducing deployment complexity.

- Kubernetes for Orchestration: Kubernetes, often abbreviated as K8s, is an open-source container orchestration platform that simplifies the management, scaling, and monitoring of containerized applications. It provides a robust framework for deploying and managing AI models at scale, making it an invaluable skillset for Generative AI.

With Kubernetes, developers can define the desired state of their AI application using declarative configurations. These configurations specify the resources required, scaling rules, and networking parameters.

Kubernetes then takes care of scheduling containers, managing their lifecycle, and scaling based on demand.

For instance, imagine a scenario where a Generative AI model for natural language processing is deployed using Kubernetes.

As the demand for processing requests fluctuates, Kubernetes automatically scales the application by spawning additional containers or adjusting resources to ensure optimal performance.

This enables the model to handle high traffic and maximize resource utilization efficiently.

- Load Balancing and High Availability: Generative AI models can be computationally intensive and require significant resources, especially when serving a large number of users or handling real-time applications. Docker and Kubernetes offer load balancing and high availability features, ensuring that the AI models are accessible and resilient even under heavy loads.

By leveraging load balancing capabilities, Kubernetes can distribute incoming requests across multiple instances of the AI model, preventing any single instance from becoming overloaded.

This helps maintain smooth performance and minimizes response times.

Additionally, Kubernetes allows developers to set up replicas of AI model containers, ensuring high availability and fault tolerance.

If any container or node fails, Kubernetes automatically reschedules or replaces the failed instance, ensuring uninterrupted service.

- Monitoring and Scaling: Docker and Kubernetes provide robust monitoring and scaling mechanisms, allowing developers to gain insights into the performance and resource utilization of their AI models. This enables proactive management and optimization of Generative AI applications.

Kubernetes exposes resource metrics, and open-source tools such as Prometheus and Grafana, which are commonly deployed alongside it rather than built in, provide real-time metrics and visualizations of the AI application’s health, resource usage, and performance.

Developers can set up alerts and notifications to promptly address any issues or performance bottlenecks.

Moreover, Kubernetes’ auto-scaling capabilities empower developers to scale AI model instances based on demand.

By setting up auto-scaling rules, Kubernetes automatically adjusts the number of containers running the AI model, ensuring efficient resource utilization and cost optimization.
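The scaling rule behind this behavior can be sketched in a few lines. The function below follows the core ratio formula documented for the Kubernetes Horizontal Pod Autoscaler, but it is only an illustration: the function name, the `min_replicas` and `max_replicas` defaults, and the sample numbers are assumptions, and details such as tolerance and stabilization windows are deliberately left out.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Replica count suggested by a ratio-based autoscaling rule.

    Core formula: ceil(current_replicas * current_metric / target_metric).
    The min/max bounds are illustrative defaults, not Kubernetes defaults.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp the suggestion to the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas averaging 90% CPU against a 60% target: scale up.
print(desired_replicas(4, 90, 60))
# 4 replicas averaging 20% CPU against a 60% target: scale down.
print(desired_replicas(4, 20, 60))
```

The further the observed metric drifts from the target, the larger the change in replica count, which is why picking a sensible target value matters as much as enabling auto-scaling itself.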

Cloud Computing

Cloud computing has revolutionized the field of artificial intelligence, including Generative AI, by providing scalable infrastructure, vast computing resources, and a wide array of services.

Cloud platforms like AWS (Amazon Web Services), Google Cloud, and Microsoft Azure offer powerful tools and services that enable developers to build, deploy, and scale Generative AI applications efficiently.

In this section, we will explore why cloud computing is a vital skillset for Generative AI and how it empowers developers to leverage the full potential of their AI models.

- Scalable Infrastructure: Generative AI models can be computationally intensive and require substantial resources, especially when dealing with large datasets or complex architectures. Cloud computing platforms offer on-demand scalability, allowing developers to quickly scale up or down the resources based on the needs of their AI models.

For example, AWS Elastic Compute Cloud (EC2) provides virtual server instances with various configurations, including high-performance GPUs optimized for AI workloads.

By leveraging EC2 instances, developers can spin up multiple instances to distribute the computational load of training or inference tasks, enabling faster execution and reducing time-to-results.

- GPU Acceleration: Generative AI models often benefit from GPU acceleration due to their ability to parallelize computations. Cloud platforms offer GPU instances, such as AWS P3 instances or Google Cloud NVIDIA GPUs, specifically designed for AI workloads. These instances provide high-performance computing capabilities, enabling developers to train and run complex Generative AI models efficiently.

Using GPU-accelerated cloud instances, developers can take advantage of parallel processing to speed up model training, inference, and experimentation.

This allows for faster iterations and empowers developers to explore more complex models and generate high-quality outputs.

- Pre-built AI Services: Cloud platforms provide pre-built AI services that simplify the development and deployment of Generative AI applications. These services offer ready-to-use APIs and tools for common AI tasks, such as natural language processing, image recognition, and speech synthesis.

For instance, AWS provides Amazon Rekognition for image and video analysis and Amazon Polly for text-to-speech synthesis, while Google Cloud offers the Vision API and the Text-to-Speech API.

By leveraging these services, developers can incorporate advanced AI capabilities into their Generative AI applications without the need for extensive model development or infrastructure management.

- Data Storage and Management: Generative AI models often require large volumes of data for training and inference. Cloud platforms offer robust and scalable storage solutions, such as AWS S3 (Simple Storage Service) and Google Cloud Storage, that enable developers to store and manage their datasets efficiently.

These storage services provide high durability, low latency, and the ability to handle large-scale data storage and retrieval.

Developers can securely store their training data, models, and other resources in the cloud, ensuring accessibility and ease of use across different environments and devices.

- Serverless Computing: Serverless computing, exemplified by AWS Lambda and Google Cloud Functions, is gaining popularity in the AI community. Serverless platforms abstract away the underlying infrastructure, allowing developers to focus on writing code rather than managing servers or scaling infrastructure.

With serverless computing, developers can deploy small, self-contained functions that respond to specific events or API calls.

This approach is particularly useful for real-time inference in Generative AI applications, where the AI model can be invoked on-demand to generate responses or outputs.

For example, a chatbot powered by a Generative AI model can utilize serverless functions to process user queries and generate responses in real-time.

By leveraging serverless computing, developers can ensure efficient resource utilization and cost optimization, as they only pay for the actual execution time of their functions.
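As a rough sketch of the chatbot scenario above, a serverless function might look like the following. It uses the `(event, context)` handler style of AWS Lambda's Python runtime; the `generate_reply` placeholder, the `"message"` field, and the response shape are assumptions for illustration, and a real deployment would call an actual model or model endpoint instead.

```python
import json


def generate_reply(prompt):
    """Placeholder standing in for a call to a generative model."""
    return f"You said: {prompt}"


def handler(event, context):
    """Handle one chat request.

    Assumes the event carries a JSON string under "body" with a
    "message" field; that payload shape is an assumption of this sketch.
    """
    try:
        payload = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return {"statusCode": 400,
                "body": json.dumps({"error": "invalid JSON"})}

    message = payload.get("message", "") if isinstance(payload, dict) else ""
    if not message:
        return {"statusCode": 400,
                "body": json.dumps({"error": "message is required"})}

    return {"statusCode": 200,
            "body": json.dumps({"reply": generate_reply(message)})}


# Local usage example; no cloud account is needed to try the logic.
print(handler({"body": json.dumps({"message": "hello"})}, None))
```

Keeping the model call behind a small function like `generate_reply` makes it easy to test the request handling locally before wiring it to a real model.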

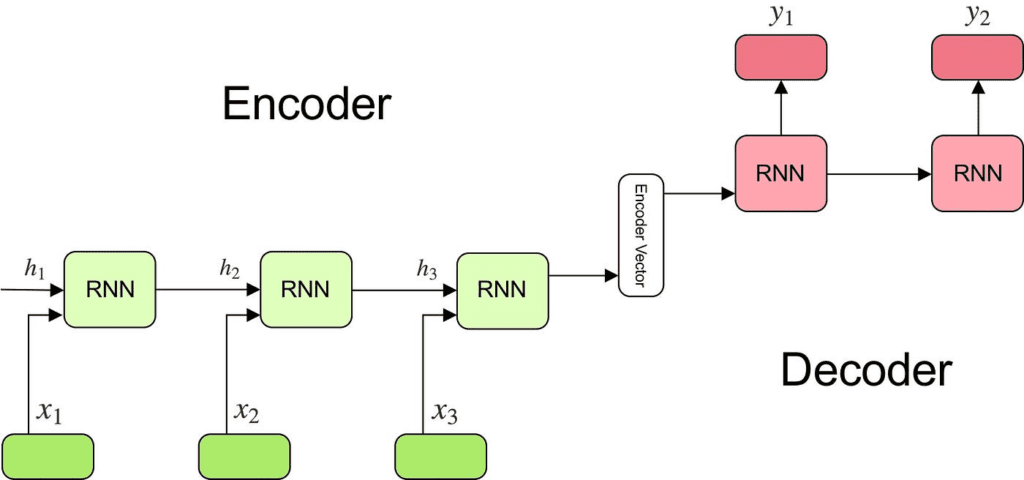

3. Encoder-Decoder Architecture as a Skillset for Generative AI

Encoder-Decoder architecture is a powerful and widely used technique in the field of Generative AI.

It plays a crucial role in various applications, including natural language processing, image synthesis, and sequence generation.

In this section, we will explore why understanding and mastering the Encoder-Decoder architecture is an essential skillset for Generative AI, and how it enables developers to create sophisticated AI models that generate realistic and meaningful outputs.

- Understanding the Encoder-Decoder Architecture: The Encoder-Decoder architecture is a neural network architecture that consists of two main components: an encoder and a decoder. The encoder is responsible for capturing the input data and transforming it into a compact representation, a vector in what is called the latent space. The decoder, on the other hand, takes this latent representation and generates the desired output based on it.

For example, in natural language processing, an encoder-decoder architecture can be used for machine translation tasks.

The encoder processes the source language sentence and encodes it into a fixed-length latent representation.

The decoder then takes this representation and generates the translated sentence in the target language.

- Sequence-to-Sequence Models: Encoder-Decoder architectures are particularly effective for sequence-to-sequence tasks, where the input and output are both sequences of data. This includes tasks like machine translation, text summarization, and dialogue generation.

In sequence-to-sequence models, the encoder processes the input sequence (e.g., a sentence) and encodes it into a fixed-length representation.

The decoder then generates the output sequence (e.g., a translated sentence) based on this representation.

This architecture allows the model to capture the contextual information from the input sequence and use it to generate meaningful and coherent output sequences.
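The data flow described above can be sketched without any deep learning library. In this toy version the recurrent networks of a real sequence-to-sequence model are swapped for much simpler stand-ins (mean pooling for the encoder, a dot-product scorer for the decoder), and the embeddings are random and untrained, so the output is meaningless. The vocabulary, `DIM`, and all function names are assumptions chosen for illustration; the point is only the shape of the computation.

```python
import random

VOCAB = ["<eos>", "the", "cat", "sat", "le", "chat", "assis"]
DIM = 4  # size of the fixed-length context vector

rng = random.Random(0)
# Untrained random embeddings, one vector per token (illustration only).
EMBED = {tok: [rng.uniform(-1, 1) for _ in range(DIM)] for tok in VOCAB}


def encode(tokens):
    """Compress a variable-length sequence into one fixed-length vector."""
    if not tokens:
        raise ValueError("tokens must not be empty")
    vectors = [EMBED[t] for t in tokens]
    # Mean pooling: average each dimension across all token vectors.
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def decode(context, max_len=5):
    """Greedy autoregressive decoding conditioned on the context vector."""
    state = list(context)
    output = []
    for _ in range(max_len):
        # Score every vocabulary item against the current state.
        scores = {tok: sum(s * e for s, e in zip(state, EMBED[tok]))
                  for tok in VOCAB}
        token = max(scores, key=scores.get)
        if token == "<eos>":
            break
        output.append(token)
        # Fold the chosen token back into the state before the next step.
        state = [0.5 * s + 0.5 * e for s, e in zip(state, EMBED[token])]
    return output


context = encode(["the", "cat", "sat"])
print(len(context), decode(context))
```

Notice that `encode` returns a vector of the same size whether the input has one token or twenty. That fixed-size bottleneck is exactly what makes long inputs hard for this kind of model.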

- Applications in Image Synthesis: Encoder-decoder ideas are not limited to natural language processing tasks; they also appear in image synthesis. A closely related example is the Generative Adversarial Network (GAN), whose generator plays a decoder-like role in producing realistic images.

In a GAN, the generator takes a random noise vector from the latent space and produces a synthetic image, much as a decoder would. The discriminator is not an encoder in the strict sense: it is a classifier that judges whether an image is real or generated, and its feedback drives the generator to improve. By training the generator and discriminator against each other, GANs can produce high-quality images that are often difficult to distinguish from real ones.

- Variational Autoencoders (VAEs): Another significant application of the Encoder-Decoder architecture is in Variational Autoencoders (VAEs). VAEs combine the power of encoder-decoder architecture with probabilistic modeling, enabling the generation of new data points with controlled variations.

In a VAE, the encoder maps the input data to the parameters of a probability distribution in the latent space, typically a mean and a variance, rather than to a single fixed point. A latent vector is sampled from this distribution, and the decoder turns it into a new data point. Sampling different latent vectors, or moving through the latent space, yields diverse outputs.

VAEs find applications in various domains, such as image generation, music composition, and data augmentation. They enable developers to explore the latent space of the data and generate novel samples with desired characteristics.
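Two small pieces of arithmetic sit at the heart of a VAE: drawing a latent vector from the encoder's distribution, and measuring how far that distribution is from a standard normal prior. The sketch below shows both in plain Python, assuming a diagonal Gaussian whose parameters are given as a mean and a log-variance per dimension. The sample values for `mu` and `log_var` are hypothetical encoder outputs, and the function names are chosen for illustration.

```python
import math
import random


def sample_latent(mu, log_var, rng):
    """Reparameterization: z = mu + sigma * eps, with eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, sigma^2) || N(0, 1) ), summed over latent dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0
                     for m, lv in zip(mu, log_var))


rng = random.Random(0)
mu = [0.5, -1.0]       # hypothetical encoder output: means
log_var = [0.0, -2.0]  # hypothetical encoder output: log-variances

z = sample_latent(mu, log_var, rng)
penalty = kl_to_standard_normal(mu, log_var)
print(z, penalty)
```

Writing the sample as `mu + sigma * eps` keeps the randomness in `eps`, which is what lets gradients flow back to the encoder during training. The KL term is typically added to the reconstruction loss so that the latent space stays smooth enough to sample from.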

- Transfer Learning and Fine-Tuning: Encoder-Decoder architectures also facilitate transfer learning, where a pre-trained model’s knowledge is transferred to a related task with limited labeled data. By reusing a pre-trained encoder’s weights, developers give the decoder informative representations to start from, which enables faster convergence and better performance.

For example, in text generation tasks, a pre-trained language model can be used as the encoder, capturing the contextual information from large amounts of text data.

The decoder is then fine-tuned for a specific task, such as generating product descriptions or news articles.

This approach saves computational resources and improves the model’s performance by leveraging the pre-trained knowledge.

The Encoder-Decoder architecture is a fundamental skillset for Generative AI.

Its versatility and wide range of applications make it a valuable tool for developers working on tasks like natural language processing, image synthesis, and sequence generation.

By mastering the Encoder-Decoder architecture, developers can design and train sophisticated AI models that generate realistic and meaningful outputs, pushing the boundaries of innovation in the field of Generative AI.

4. Attention Mechanism as a Skillset for Generative AI

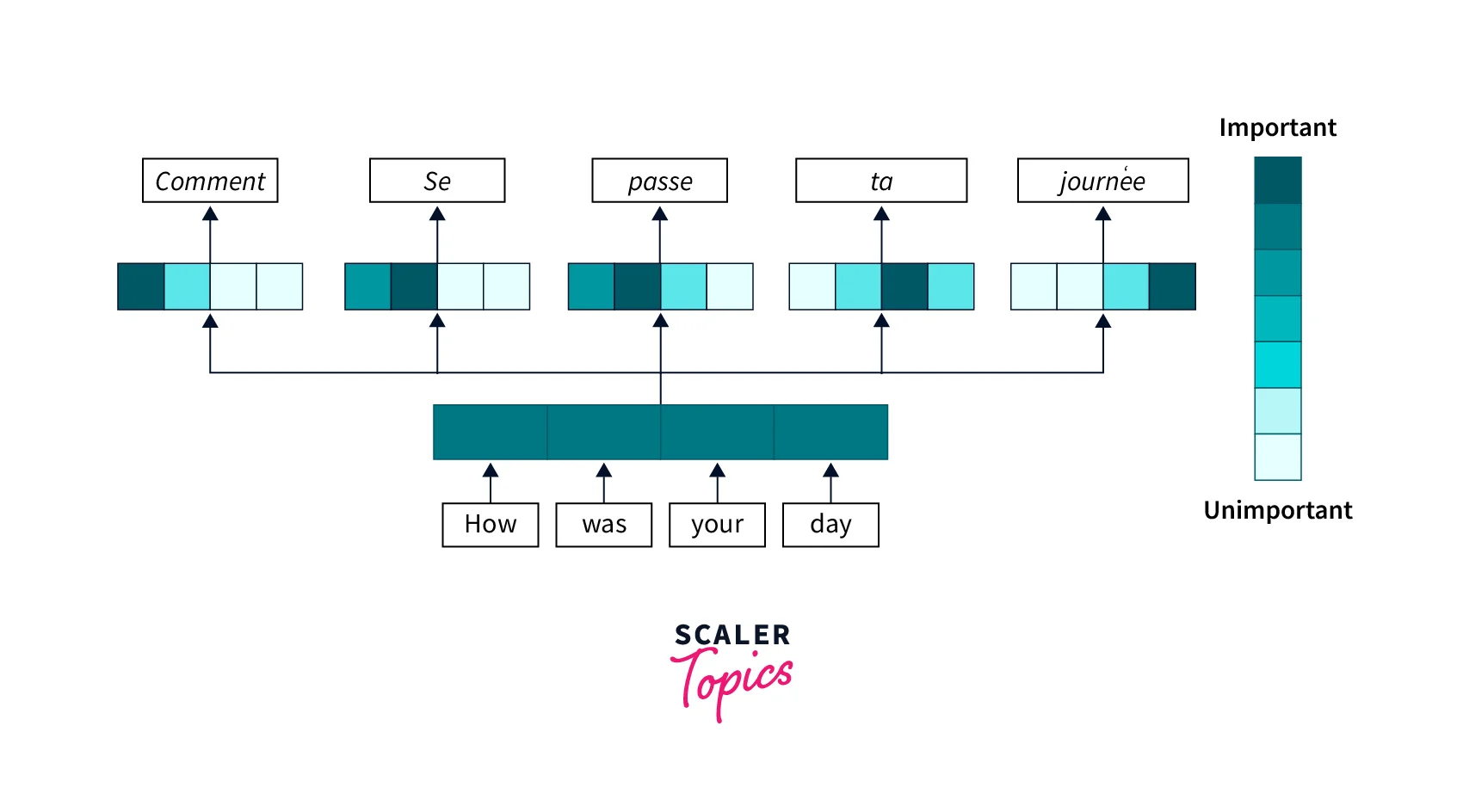

The attention mechanism has emerged as a crucial skillset in the field of Generative AI, revolutionizing tasks such as machine translation, image captioning, and text summarization.

By enabling models to focus on relevant parts of input sequences while generating outputs, the attention mechanism has significantly improved the quality and coherence of generated content.

In this section, we will delve into why understanding and mastering the attention mechanism is essential for Generative AI, and explore its applications and benefits with relevant examples.

- Understanding the Attention Mechanism: The attention mechanism is a neural network component that allows models to selectively focus on different parts of the input sequence when generating each element of the output sequence. Unlike traditional sequence generation models that rely solely on a fixed-length representation of the entire input, the attention mechanism dynamically assigns different weights to different parts of the input sequence, providing more context-aware and accurate generation.

- Applications in Machine Translation: One of the prominent applications of the attention mechanism is in machine translation. Traditionally, machine translation models used fixed-length representations to encode the source sentence, often resulting in suboptimal translations. However, with the attention mechanism, the model can assign varying weights to different words in the source sentence while generating each word of the target sentence, enabling more accurate translations.

For example, in the sentence “Je suis étudiant en informatique” (I am a student of computer science), a machine translation model utilizing the attention mechanism would attend more to the words “étudiant” and “informatique” while generating the corresponding words in the target sentence.

- Image Captioning and Visual Attention: The attention mechanism is not limited to text-based tasks. It has also been successfully applied to image captioning, where a model generates textual descriptions for images. In this scenario, the attention mechanism enables the model to attend to different regions of the image while generating each word of the caption, ensuring that the generated text accurately describes the relevant parts of the image.

For instance, in an image of a dog running in a park, the attention mechanism would focus on the dog while generating the word “dog” and then shift its attention to the surrounding scenery when generating the word “park.”

- Benefits of the Attention Mechanism: The attention mechanism offers several benefits in Generative AI tasks:

- a. Improved Coherence: By attending to different parts of the input sequence, the attention mechanism helps generate outputs that are more contextually relevant and coherent.

- b. Handling Long Sequences: Traditional sequence generation models often struggle with long input sequences due to the fixed-length representation. The attention mechanism allows models to effectively handle long sequences by focusing on the most relevant parts at each step.

- c. Interpretability: The attention weights generated by the mechanism provide insights into which parts of the input sequence are more important for generating specific elements of the output. This interpretability is valuable for understanding model behavior and debugging.
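The weighting idea described in this section can be sketched in plain Python. The example uses scaled dot-product scoring, which is one common way to compute attention scores (others exist, such as additive scoring), and the tiny two-dimensional vectors are made up for illustration. The `softmax` and `attend` names are assumptions of this sketch.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Return (context, weights) for one query over a sequence."""
    scale = math.sqrt(len(query))
    # One similarity score per input position.
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # Context is the weighted average of the value vectors.
    context = [sum(w * v[i] for w, v in zip(weights, values))
               for i in range(len(values[0]))]
    return context, weights


# Hypothetical 2-d encoder states for three source tokens.
keys = values = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
query = [0.0, 2.0]  # decoder state at the current output step

context, weights = attend(query, keys, values)
print(weights)
```

The weights always sum to one, so they can be read as the share of attention each input position receives. That is the property the interpretability point above relies on.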

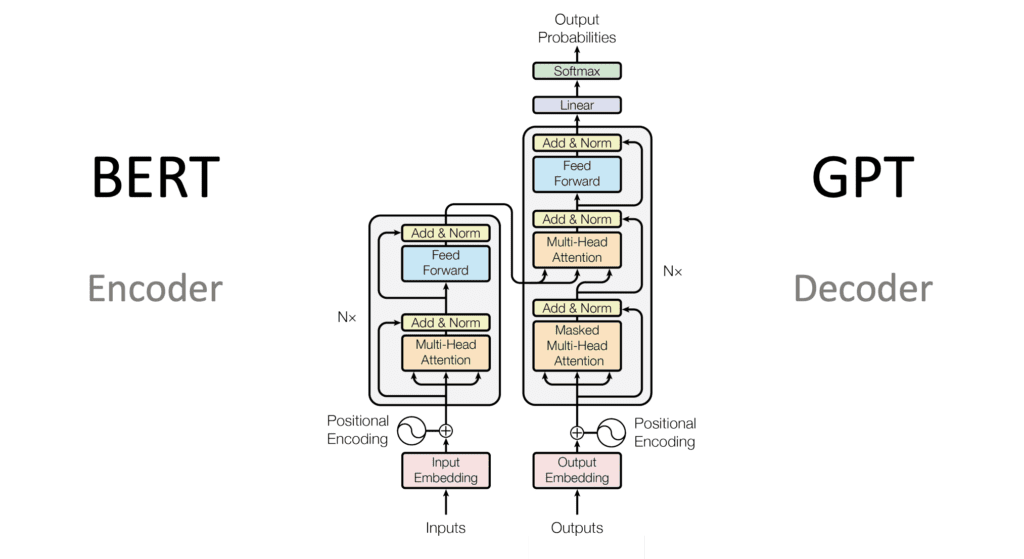

- Transformer Architecture and Self-Attention: The attention mechanism plays a central role in the Transformer architecture, which has become the de facto standard for many Generative AI tasks. The Transformer utilizes a self-attention mechanism, where the model attends to different positions in the input sequence to compute a weighted representation for each position.

For example, in the Transformer-based language model GPT-3, the self-attention mechanism allows the model to capture the dependencies between words in a sentence, resulting in coherent and contextually relevant text generation.

- Customizing Attention Mechanism: Developers can also customize the attention mechanism based on the specific requirements of their Generative AI tasks. They can introduce variations such as multi-head attention, which uses multiple sets of attention weights to capture different aspects of the input sequence, or sparse attention, which limits the attention computation to a subset of the sequence to enhance efficiency.

By experimenting with different variations of the attention mechanism, developers can optimize model performance and address specific challenges posed by their Generative AI tasks.
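As one small illustration of the sparse variant, the sketch below builds a sliding-window mask in which each position may attend only to its close neighbors. This is just one simple sparse pattern among many, and the function name and the `window` parameter are assumptions chosen for this example.

```python
def local_attention_mask(seq_len, window):
    """mask[i][j] is True when position i may attend to position j.

    Each position sees only neighbors within `window` steps.
    """
    return [[abs(i - j) <= window for j in range(seq_len)]
            for i in range(seq_len)]


mask = local_attention_mask(seq_len=6, window=1)
allowed = sum(cell for row in mask for cell in row)
print(f"{allowed} of {6 * 6} pairs are scored")
```

With full attention the number of scored pairs grows with the square of the sequence length, while a fixed window makes it grow roughly linearly. The trade-off is that distant positions can no longer attend to each other directly.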

The attention mechanism is a vital skillset for Generative AI.

Its ability to selectively focus on different parts of the input sequence improves the coherence, accuracy, and interpretability of generated content.

By mastering the attention mechanism and its variations, developers can design and train AI models that excel in tasks like machine translation, image captioning, and text summarization, pushing the boundaries of Generative AI and delivering high-quality, contextually-aware outputs.

To find out more about the Attention Mechanism, have a look at Google’s free Generative AI learning path.

5. Transformer Models and BERT Model as Skillsets for Generative AI

Transformer models, with their groundbreaking architecture, have revolutionized the field of Generative AI.

They have introduced a new paradigm for sequence generation tasks, such as machine translation, text generation, and question answering.

Among the notable transformer models, BERT (Bidirectional Encoder Representations from Transformers) has garnered significant attention for its remarkable language understanding capabilities.

In this section, we will explore why understanding and mastering transformer models, including BERT, is crucial for Generative AI, and discuss their applications and benefits with relevant examples.

- The Power of Transformer Models: Transformer models have emerged as a dominant force in Generative AI due to their ability to capture long-range dependencies in sequences more effectively than traditional recurrent neural networks (RNNs). The transformer architecture employs self-attention mechanisms that allow the model to attend to different positions within the input sequence, enabling it to learn contextual representations and generate coherent and contextually relevant outputs.

- Language Understanding with BERT: BERT, one of the most influential transformer models, has significantly advanced language understanding tasks. BERT is pre-trained on a large corpus of unlabeled text with a masked language modeling objective, in which the model predicts hidden words from the context on both sides, and is then fine-tuned on specific downstream tasks. This approach enables BERT to capture deep contextual relationships and produce high-quality representations of words and sentences.

For example, in natural language understanding tasks, such as sentiment analysis or named entity recognition, BERT can effectively comprehend the context of a given sentence and provide accurate predictions.

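By understanding the nuances and relationships between words and phrases, BERT gives developers rich, context-aware representations to build on. BERT itself is an encoder-only model, so it is typically used to understand text rather than to generate it.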
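BERT's bidirectional pre-training relies on hiding some input tokens and asking the model to recover them. The sketch below prepares such training examples following the masking proportions described in the BERT paper: about 15% of positions are selected, and a selected token is replaced with `[MASK]` 80% of the time, with a random token 10% of the time, and left unchanged otherwise. The function name, the whitespace tokenization, and the sample sentence are assumptions for illustration; real pipelines use a subword tokenizer.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, rng, select_prob=0.15):
    """Return (corrupted, labels) for masked-language-model training.

    labels holds the original token at selected positions and None
    elsewhere, so the loss is computed only where a prediction is asked.
    """
    corrupted, labels = [], []
    for token in tokens:
        if rng.random() >= select_prob:
            # Not selected: keep the token and predict nothing here.
            corrupted.append(token)
            labels.append(None)
            continue
        labels.append(token)
        roll = rng.random()
        if roll < 0.8:
            corrupted.append(MASK)
        elif roll < 0.9:
            corrupted.append(rng.choice(vocab))
        else:
            corrupted.append(token)
    return corrupted, labels


tokens = "the model reads context on both sides of each word".split()
corrupted, labels = mask_tokens(tokens, vocab=tokens, rng=random.Random(0))
print(corrupted)
print(labels)
```

Because the model sees the words on both sides of each hidden position, it learns representations that use left and right context together, which is what "bidirectional" refers to here.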

- Sequence-to-Sequence Generation: Transformer models excel in sequence-to-sequence generation tasks, where the model takes an input sequence and generates a corresponding output sequence. These tasks include machine translation, text summarization, and dialogue generation.

For instance, in machine translation, transformer models leverage self-attention mechanisms to attend to different words in the source sentence and generate accurate translations in the target language.

This enables developers to build powerful language translation systems that outperform traditional approaches.

- Pre-training and Transfer Learning: One significant advantage of transformer models like BERT is their pre-training and transfer learning capabilities. Pre-training involves training a language model on a large corpus of unlabeled text data, enabling the model to learn rich representations of words and sentences. These pre-trained models can then be fine-tuned on specific downstream tasks with limited labeled data, allowing developers to achieve excellent performance even with limited training data.

This transfer learning approach saves computational resources and significantly reduces the time and effort required to develop high-performing models.

Developers can leverage pre-trained transformer models as a starting point and fine-tune them on their specific tasks, using an encoder model like BERT for sentiment analysis or a decoder model from the GPT family for text generation.

- Applications in Natural Language Processing: Transformer models, including BERT, have found extensive applications in various natural language processing (NLP) tasks. They have demonstrated remarkable performance in tasks such as text classification, question answering, sentiment analysis, and text summarization.

For example, BERT has shown impressive results in question answering tasks, where it can understand the context of a question and extract accurate answers from the given passage or document.

This has significant implications for applications like virtual assistants, chatbots, and information retrieval systems.

- Multimodal Generative AI: Transformers are not limited to text-based tasks. They have also been extended to handle multimodal data, combining text with other modalities like images or audio. By integrating transformer models with vision or audio processing networks, developers can build powerful multimodal Generative AI systems.

For instance, in image captioning, a transformer model can utilize the visual information from the image and generate descriptive captions that accurately represent the image content.

This fusion of vision and language using transformer models opens up exciting possibilities for applications like automatic image description and content generation.

Understanding and mastering transformer models, including BERT, is essential for Generative AI.

These models offer powerful language understanding capabilities, enabling developers to generate contextually relevant and high-quality outputs.

Whether it’s machine translation, text summarization, or multimodal generation, transformer models have proven to be instrumental in advancing the field of Generative AI.

By leveraging their pre-training and transfer learning abilities, developers can build sophisticated AI systems that push the boundaries of language understanding and content generation.

Conclusion

The world of Generative AI is evolving rapidly, and mastering the top five skills mentioned in this blog can significantly enhance your capabilities as an AI developer or practitioner.

Python, Docker/Kubernetes, Cloud Computing, Encoder-Decoder Architecture, and Attention Mechanism are the key skills that empower you to create advanced and innovative AI models and ChatGPT-style applications.

Python serves as the foundational programming language for AI development, offering a vast ecosystem of libraries and tools specifically designed for machine learning and natural language processing tasks.

Its simplicity, flexibility, and extensive community support make it an indispensable skill for anyone venturing into Generative AI.

Docker and Kubernetes skills are essential for effectively managing AI model deployments and orchestrating containerized environments.

These technologies ensure scalability, reproducibility, and portability of your models, making them crucial in production-ready AI systems.

Cloud computing, with platforms like AWS, Google Cloud, and Microsoft Azure, provides the infrastructure and services needed to train and deploy AI models at scale.

Harnessing the power of cloud platforms allows you to access vast computing resources, data storage, and AI-specific services, enabling you to tackle complex Generative AI tasks efficiently.

The Encoder-Decoder architecture lies at the heart of many Generative AI applications: an encoder compresses the input into an internal representation, and a decoder generates the output from that representation.

Whether it’s natural language processing or image synthesis, understanding the Encoder-Decoder architecture equips you with the knowledge to design sophisticated AI models capable of generating realistic and coherent outputs.

The Attention Mechanism, a crucial component of transformer models, enables your AI systems to focus on relevant parts of input sequences while generating outputs.

This mechanism enhances the coherence, accuracy, and interpretability of generated content, making it an indispensable skill for tasks such as machine translation, image captioning, and text summarization.
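As a reminder of what "focusing on relevant parts" means in practice, here is a minimal sketch of scaled dot-product attention for a single query, written in plain Python. The function names and the toy vectors are illustrative assumptions, and real transformer layers add learned projections, multiple heads, and batching on top of this.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]  # subtract max for stability
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    d = len(query)
    # Similarity of the query to each key, scaled by sqrt(d).
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # Output is the weighted average of the value vectors.
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output


# Toy input: three positions, two dimensions each.
query = [2.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]

weights, output = attention(query, keys, values)
print([round(w, 3) for w in weights])
print([round(x, 3) for x in output])
```

The first key is the most similar to the query, so it receives the largest weight and its value dominates the output. Inspecting these weights is also what gives attention its often-cited interpretability.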

Moreover, transformer models, with BERT as a prime example, have revolutionized Generative AI by capturing long-range dependencies and contextual relationships in sequences more effectively.

Encoder models like BERT excel in tasks such as language understanding and question answering, while decoder-based transformers such as GPT handle text generation, thanks to their advanced architecture and transfer learning capabilities.

By acquiring and honing these top five Generative ChatGPT AI skills, you position yourself at the forefront of AI innovation.

Whether you are a researcher pushing the boundaries of AI technology or a developer building practical AI solutions, these skills empower you to create intelligent systems that generate meaningful, coherent, and contextually aware outputs.

As the field of Generative AI continues to advance, staying updated with the latest developments and techniques is crucial.

Regularly engaging with the AI community, attending conferences and workshops, and exploring new research papers and resources will help you refine your skills and stay at the cutting edge of Generative AI.

In summary, embracing Python programming, mastering Docker/Kubernetes, leveraging cloud computing, understanding the Encoder-Decoder architecture, and harnessing the power of the Attention Mechanism and transformer models like BERT are the key skills that can propel your Generative ChatGPT AI endeavors to new heights.

Embrace these skills, practice them diligently, and unleash your creativity to develop intelligent and innovative AI systems that shape the future of Generative AI.

If your company needs HR, hiring, or corporate services, you can use 9cv9 hiring and recruitment services. Book a consultation slot here, or send over an email to [email protected].

If you find this article useful, why not share it with your hiring manager and C-suite colleagues, and leave a comment below?

We, at the 9cv9 Research Team, strive to bring the latest and most meaningful data, guides, and statistics to your doorstep.

To get access to top-quality guides, click over to 9cv9 Blog.

If you need to hire amazing Generative AI engineers, then we recommend Vietnam as the world’s top destination to hire. Read our guide “Why Vietnam is a hot destination for Hiring Generative AI ChatGPT Engineers” to learn more.

People Also Ask

What are generative AI examples?

Generative AI encompasses various examples, such as image synthesis (creating realistic images), text generation (automated writing or dialogue), music composition (producing original melodies), and video game level design (creating game environments). It involves teaching AI models to generate new content that resembles human-created data, pushing the boundaries of creativity and innovation.

What are examples of attention mechanisms?

Attention mechanisms find applications in various fields. Examples include machine translation (focusing on relevant words during translation), image captioning (highlighting salient image regions for description), sentiment analysis (emphasizing crucial words for sentiment classification), and speech recognition (attending to important acoustic features for accurate transcription). Attention enhances model performance by selectively focusing on relevant information.

What is BERT Google AI?

BERT (Bidirectional Encoder Representations from Transformers) is a Google-developed language model that revolutionized natural language understanding tasks. It utilizes a transformer architecture and pre-training on large amounts of unlabeled text data to generate rich word and sentence representations. BERT has achieved state-of-the-art performance in various NLP tasks, including question answering and text classification.
