Key Takeaways

- PyTorch, TensorFlow, and JAX lead global adoption, offering unmatched flexibility, performance, and research-to-production workflows.

- Enterprise-focused platforms like Amazon SageMaker, Google Vertex AI, and Azure ML dominate large-scale, secure AI deployments.

- Efficiency, scalability, and support for small models and agentic AI are key trends shaping deep learning software innovation in 2026.

The landscape of artificial intelligence has evolved rapidly, and in 2026, deep learning stands at the forefront of technological innovation across every major industry—from healthcare and automotive to finance, robotics, and natural language processing. As organizations accelerate their digital transformation strategies, selecting the right deep learning software has become more critical than ever. With hundreds of AI tools and frameworks available in the market, each offering different capabilities in training speed, model optimization, scalability, and enterprise deployment, it’s increasingly difficult for decision-makers to know where to begin.

This comprehensive guide ranks and analyses the top 10 deep learning software platforms in the world in 2026, offering expert insights into the tools shaping the next generation of intelligent systems. From open-source frameworks trusted by academic researchers to enterprise-grade platforms tailored for large-scale production environments, each software tool on this list has been evaluated based on multiple criteria including performance benchmarks, flexibility, ease of integration, pricing, real-world use cases, and developer community adoption.

In 2026, the deep learning software market is defined by three dominant trends:

- The rise of foundation models (like GPT, Llama, and Gemini) has redefined how deep learning tools are built, fine-tuned, and served.

- The shift toward hybrid model workflows, combining edge computing, on-premise resources, and cloud-based deployment pipelines.

- The growing demand for energy-efficient inference and responsible AI tooling, which has led to innovative software features that prioritize sustainability, transparency, and data privacy.

Industry leaders such as PyTorch, TensorFlow, and JAX continue to evolve with new compiler optimizations and support for dynamic model architectures. At the same time, enterprise-focused platforms like Amazon SageMaker, Google Cloud Vertex AI, Microsoft Azure Machine Learning, and Databricks Mosaic AI are expanding their capabilities to include native support for agent-based systems, AutoML pipelines, and large model training at scale. Furthermore, specialized tools such as DataRobot and NVIDIA AI Enterprise are pushing the boundaries of automation, performance, and deployment flexibility for large organizations with mission-critical AI use cases.

Whether you’re a data scientist building your next computer vision model, an ML engineer deploying large language models in production, or a business leader evaluating AI software for your organization, this guide provides an in-depth breakdown of the 10 most impactful deep learning platforms in 2026.

To help you make the most informed decision, each platform is profiled across the following dimensions:

- Core features and functionalities

- Best use cases and industry applications

- Pricing models and licensing flexibility

- Integration with popular ML workflows and cloud providers

- Performance benchmarks from real-world inference tests

- Community support, documentation quality, and user satisfaction ratings

The goal of this article is to serve as a definitive reference point for understanding the deep learning software ecosystem in 2026. With global AI investments expected to surpass USD 500 billion by 2030, and with the market for deep learning solutions growing at an average CAGR of over 30%, selecting the right software stack is no longer a matter of preference—it’s a strategic imperative for any forward-thinking AI initiative.

Continue reading to explore the top 10 deep learning software tools powering innovation, automation, and intelligence across the globe in 2026.

Before we venture further into this article, we would like to share who we are and what we do.

About 9cv9

9cv9 is a business tech startup based in Singapore and Asia, with a strong presence all over the world.

With over nine years of startup and business experience, and being highly involved in connecting with thousands of companies and startups, the 9cv9 team has listed some important learning points in this overview of the Top 10 Best Deep Learning Software in 2026.


Top 10 Best Deep Learning Software in 2026

- PyTorch

- TensorFlow

- JAX

- Hugging Face

- NVIDIA AI Enterprise

- Databricks Mosaic AI

- DataRobot

- Google Cloud Vertex AI

- Amazon SageMaker

- Microsoft Azure Machine Learning

1. PyTorch


PyTorch, developed by Meta Platforms, has matured into a global leader in the deep learning software industry. Originally embraced by academic researchers due to its intuitive, Python-based workflow, PyTorch now powers many real-world AI systems thanks to recent upgrades.

Unlike older static graph frameworks, PyTorch uses dynamic computation graphs. This approach, known as “Define-by-Run,” allows users to build and modify models with standard Python code and control structures. Developers can create complex neural networks with greater flexibility, making it particularly suitable for tasks involving RNNs, transformers, and other intricate architectures.
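To make the “Define-by-Run” idea concrete, here is a minimal sketch. The model name, layer sizes, and the `depth` argument are illustrative assumptions rather than anything taken from PyTorch itself; the point is only that ordinary Python loops and branches decide what gets computed on each call.

```python
import torch
import torch.nn as nn


class DepthAdaptiveNet(nn.Module):
    """Hypothetical toy model: the number of hidden passes is chosen per call."""

    def __init__(self, width: int = 8):
        super().__init__()
        self.hidden = nn.Linear(width, width)
        self.head = nn.Linear(width, 1)

    def forward(self, x: torch.Tensor, depth: int) -> torch.Tensor:
        # A plain Python loop: the graph is recorded as these lines execute,
        # so a different `depth` simply records a different graph.
        for _ in range(depth):
            x = torch.relu(self.hidden(x))
        # A plain Python branch on a value computed at run time.
        if x.mean() > 0:
            x = x * 2.0
        return self.head(x)


torch.manual_seed(0)
model = DepthAdaptiveNet()
batch = torch.randn(4, 8)

shallow = model(batch, depth=1)
deep = model(batch, depth=3)

# Autograd follows whichever path was actually executed.
deep.sum().backward()
print(shallow.shape, deep.shape, model.hidden.weight.grad is not None)
```

Because the control flow is ordinary Python, a standard debugger or a `print` call inside `forward` works without any framework-specific tooling.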

PyTorch in Production: The 2026 Landscape

By 2026, PyTorch is no longer just a research tool. It holds a significant 55% share in production deep learning deployments globally. This shift was fueled by the release of PyTorch 2.x, which introduced torch.compile, a compiler interface whose default backend (TorchInductor) generates Triton kernels for GPUs.

This compiler allows developers to optimize their models with little to no code changes. On average, using torch.compile has been shown to improve performance by 30% to 60%. In single-GPU training scenarios, it can even achieve full GPU utilization.
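As a rough illustration of how small the code change is, the sketch below wraps an existing module with `torch.compile` and checks that the outputs match. The tiny model and the choice of `backend="eager"` are assumptions made so the example runs without a compiler toolchain; that backend only captures the graph and gives no speedup, so it says nothing about the performance figures quoted above.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# An ordinary eager-mode model (sizes are arbitrary, for illustration only).
model = nn.Sequential(nn.Linear(16, 32), nn.ReLU(), nn.Linear(32, 4)).eval()
example = torch.randn(8, 16)

# The one-line change: wrap the existing module.
# backend="eager" captures the graph but skips code generation, so it runs
# anywhere and is used here purely for portability. Omitting the argument
# selects the default "inductor" backend, which is the one that generates
# optimized kernels.
compiled_model = torch.compile(model, backend="eager")

with torch.no_grad():
    expected = model(example)
    actual = compiled_model(example)  # the first call triggers graph capture

print(torch.allclose(expected, actual, atol=1e-6))
```

Any real speedup depends on the model, the hardware, and the backend, so it is worth timing both versions on your own workload rather than relying on an average.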

Benchmarking PyTorch’s Performance in 2026

Here is a detailed benchmarking table showing PyTorch’s technical capabilities and speed enhancements:

| Feature Category | Description / Result |

|---|---|

| Computational Graph Type | Dynamic (Define-by-Run) |

| Debugging Tools | Full compatibility with native Python debugging |

| Compiler Layer | torch.compile with Triton backend |

| Average Speed Boost | 30% – 60% acceleration |

| Inference Acceleration | Up to 2.27x faster with A100 GPUs |

| Training Acceleration | Up to 1.41x faster in multi-GPU scenarios |

| Inference Library | TorchServe, ONNX Runtime integration |

| Max GPU VRAM Utilization | 6.69 GB (for synthetic CNN tasks) |

| Training Time Example | 2.86s per epoch (L4 GPU, batch size 32) |

PyTorch vs Traditional Frameworks

A comparative matrix showcases how PyTorch outperforms or complements older static-graph frameworks such as TensorFlow 1.x or Theano.

| Criteria | PyTorch | Traditional Static Frameworks |

|---|---|---|

| Graph Flexibility | High (Dynamic) | Low (Static) |

| Ease of Debugging | Python-native | Requires special tools |

| Compilation Optimization | torch.compile | XLA / Manual tuning |

| Community Support (2026) | Extensive | Moderate |

| Deployment Readiness | Production-grade | Varies |

| Learning Curve | Beginner-friendly | Steeper |

Adoption by the Research and Engineering Community

Many AI professionals continue to prefer PyTorch for the following reasons:

- It allows rapid prototyping with real-time debugging using standard Python tools.

- Complex architectures such as state space models, GANs, and transformers can be built and tested with fewer lines of code.

- Its growing ecosystem includes hundreds of pre-trained models and integrations with libraries such as Hugging Face Transformers, PyTorch Lightning, and MONAI.

Professional Insight: Robotics Use Case

A senior robotics researcher in 2026 noted that PyTorch’s flexibility remains unmatched when developing real-time control systems. The dynamic graph model allows experimentation without rebuilding models from scratch, which saves time and enhances productivity.

However, some users report that integrating PyTorch outputs into machine learning pipelines using traditional tools like scikit-learn still requires custom wrappers. This gap highlights the need for more seamless interoperability across AI software stacks.

Technical Summary Table: PyTorch in 2026

| Technical Component | Specification / Performance |

|---|---|

| Framework Core | Python-based, dynamic graph execution |

| Compilation Feature | torch.compile (Triton) |

| GPU Optimization | 100% single-GPU utilization potential |

| Distributed Training Tool | torch.distributed (NCCL, Gloo support) |

| High-Throughput Serving | TorchServe and ONNX |

| Model Portability | Supported via TorchScript and ONNX export |

| Training Speed Benchmarks | 1.41x gain (multi-GPU), 2.86s/epoch (single-GPU) |

| Inference Speed Benchmarks | Up to 2.27x gain |

Key Takeaways

- PyTorch is a top deep learning framework in 2026, used widely in both research and commercial applications.

- Its “Define-by-Run” architecture offers unparalleled flexibility for building advanced models.

- The release of PyTorch 2.x and torch.compile dramatically improved performance, making it suitable for large-scale production use.

- Benchmark studies demonstrate significant improvements in speed, memory usage, and GPU efficiency.

- While integration with traditional ML libraries requires additional effort, PyTorch’s growing ecosystem continues to expand its capabilities.

Conclusion

As AI continues to evolve in 2026, PyTorch remains a dominant force in the deep learning software ecosystem. With its combination of developer-friendly tools, advanced performance optimization, and strong community support, PyTorch sets a high standard for what a modern deep learning framework should deliver.

2. TensorFlow


TensorFlow, developed and maintained by Google, continues to be one of the most powerful and widely adopted deep learning frameworks in 2026. Its strong focus on enterprise applications, scalability, and production-level stability has made it the preferred platform for large organizations, cloud-based AI services, and high-performance model deployment. While other frameworks like PyTorch have gained popularity in research and prototyping, TensorFlow remains the backbone of industrial-grade AI systems.

This section explores TensorFlow’s architecture, real-world performance, tool integrations, and its unmatched position in enterprise-scale machine learning operations.

Enterprise Focus and Global Adoption

TensorFlow is designed with production use cases in mind. It maintains a 38% market share in large-scale deployment environments worldwide. The framework is especially well-suited for companies that need to manage thousands of machine learning models simultaneously across cloud and edge infrastructures. Its support for static computation graphs through a “Define-and-Run” model allows for better optimization, memory control, and execution speed—traits essential for reliable operations in enterprise settings.

Over the years, TensorFlow has improved its flexibility by making eager execution the default in version 2.x, with graph execution still available through tf.function. This made the platform more accessible to beginners and prototypers without sacrificing its advanced performance capabilities. However, it continues to stand out in production scenarios where stability, monitoring, and scalability are critical.
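The difference between eager and graph execution can be seen in a few lines. This is a minimal sketch, assuming TensorFlow 2.x is installed; the function name and input values are made up for illustration.

```python
import tensorflow as tf


def scaled_sum(x, y):
    """Toy computation: double x, add y, and sum the result."""
    return tf.reduce_sum(x * 2.0 + y)


x = tf.constant([1.0, 2.0, 3.0])
y = tf.constant([0.5, 0.5, 0.5])

# Eager execution: each op runs immediately and returns a concrete tensor.
eager_result = scaled_sum(x, y)

# Graph execution: tf.function traces the same Python function into a graph,
# which TensorFlow can then optimize and reuse for inputs of the same signature.
graph_fn = tf.function(scaled_sum)
graph_result = graph_fn(x, y)

print(float(eager_result), float(graph_result))
```

Both calls compute the same value; the graph version trades a one-time tracing cost for an optimizable, exportable representation, which is the property production deployments tend to rely on.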

Comprehensive Tooling and Ecosystem

TensorFlow comes with a robust and complete ecosystem that supports every stage of the machine learning workflow. This includes:

- TensorFlow Extended (TFX) for production ML pipelines

- TensorFlow Serving for efficient and scalable model inference

- TensorFlow Lite for deploying models on mobile and embedded devices

- Keras for easy model building through a high-level, modular API

- TensorBoard for detailed visualization and debugging

- TensorFlow Hub for reusable machine learning modules

- XLA (Accelerated Linear Algebra) for performance tuning on custom hardware, especially TPUs

TensorFlow Performance and Technical Metrics (2026)

| Component | Specification / Performance Insight |

|---|---|

| Execution Graph | Static (Define-and-Run) with Eager Support |

| Primary Compiler | XLA Compiler (optimized for TPU execution) |

| Inference Engine | TensorFlow Serving / TensorFlow Lite |

| High-Level API | Keras (modular layer stacking, user-friendly) |

| Supported Platforms | CPU, GPU, TPU, Mobile (Android/iOS), Edge Devices |

| Training Time (Synthetic CNN) | 90.88 seconds on L4 GPU |

| Memory Utilization | Max 8.74 GB VRAM for standard CNN task |

| Model Reusability | Strong via TensorFlow Hub |

| MLOps Integration | Deep integration with Google Cloud and TFX pipeline |

TensorFlow’s Strategic Strengths in Cloud and Edge AI

One major reason for TensorFlow’s widespread use in 2026 is its seamless integration with Google Cloud Platform (GCP). Companies running distributed AI workloads on TPUs benefit significantly from the use of the XLA compiler, which merges and fuses graph operations for better throughput and reduced memory load. This makes TensorFlow a top choice for organizations seeking to train large models quickly and cost-effectively on the cloud.

For on-device intelligence, TensorFlow Lite is widely adopted for running inference on mobile phones, microcontrollers, and edge systems. Its optimizations for power and size make it ideal for smart IoT devices, wearables, and embedded applications.

Framework Comparison: TensorFlow vs Other Deep Learning Tools (2026)

| Feature Area | TensorFlow | PyTorch | JAX | Hugging Face Transformers |

|---|---|---|---|---|

| Execution Graph | Static + Eager (Hybrid) | Dynamic | Functional + JIT | Dynamic |

| Production Scalability | Excellent | Improving | Moderate | Moderate |

| Cloud Optimization | GCP + TPU (XLA) | GCP/AWS (CUDA) | TPU-focused | AWS/Various |

| Edge/Mobile Support | TensorFlow Lite | PyTorch Mobile / ExecuTorch | Limited | Limited |

| Ecosystem Maturity | Extensive | Strong | Growing | Focused on NLP |

| Beginner-Friendly APIs | Keras | Native Python | Requires Functional Skills | Transformers Library |

| Monitoring & MLOps | TFX, TensorBoard | Weights & Biases, Lightning | Custom Solutions | WandB, Custom |

Industry Testimonial: TensorFlow in Logistics and Global AI Infrastructure

A machine learning engineer from a global logistics corporation shared insights on TensorFlow’s operational strength. The engineer highlighted that TensorFlow is particularly effective when deployed at scale across hundreds or thousands of edge devices. The suite of production-ready tools within the TensorFlow ecosystem—especially TFX and TensorFlow Serving—makes automation and monitoring highly efficient.

While acknowledging that TensorFlow’s lower-level API might require more configuration compared to PyTorch’s intuitive syntax, the engineer emphasized that Keras simplifies the process of building common architectures such as CNNs and LSTMs. This modular approach accelerates development while maintaining enterprise-level stability.

Key Benefits of TensorFlow for Business in 2026

| Benefit Area | Description |

|---|---|

| Stability in Production | Proven reliability for long-term AI operations |

| Full-Stack Integration | Tools for data prep, training, deployment, and monitoring |

| Cross-Platform Portability | From cloud to mobile and embedded hardware |

| High Throughput Training | Optimized for large datasets and hardware acceleration |

| Scalable Inference | TensorFlow Serving handles millions of predictions per day |

| Flexible Development | Keras makes model creation fast and modular |

Conclusion

TensorFlow has firmly positioned itself as the go-to deep learning framework for enterprises in 2026. Its comprehensive tools, optimized performance on TPUs, and full integration with GCP allow organizations to confidently build, deploy, and manage AI models at scale.

3. JAX

In the fast-evolving world of artificial intelligence, JAX has established itself as a powerful tool for researchers who need speed, precision, and control. Unlike conventional deep learning platforms, JAX is not built as a full-stack machine learning solution. Instead, it is designed for high-performance numerical computing, with a focus on composable transformations, functional programming, and seamless hardware acceleration. Developed by Google, JAX is now widely adopted across advanced research fields such as quantum computing, physics simulations, and next-generation AI model development.

Unique Functional Design and Core Philosophy

JAX is built around a functional programming approach, where data is immutable and computations are written in a side-effect-free style. This encourages reproducible and parallelizable code. Its design prioritizes transformation of functions, offering features like:

- jax.jit for Just-In-Time (JIT) compilation to generate optimized machine-level code

- jax.vmap for automatic vectorization, enabling batch processing with no manual loops

- jax.pmap for parallel execution across multiple GPUs or TPUs

By extending NumPy’s familiar API with these advanced features, JAX allows researchers to write mathematical operations in pure Python while executing them at top speed on modern hardware.
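The sketch below shows how two of these transformations compose. The `predict` function, the weights, and the batch are hypothetical; `jax.pmap` is left out because it needs multiple devices to be meaningful.

```python
import jax
import jax.numpy as jnp


def predict(weights, x):
    """Toy single-example model: a dot product followed by tanh."""
    return jnp.tanh(jnp.dot(weights, x))


weights = jnp.array([0.5, -1.0, 2.0])
batch = jnp.arange(12.0).reshape(4, 3)  # four examples, three features each

# vmap: map over the leading axis of `x` only; the weights are shared.
batched_predict = jax.vmap(predict, in_axes=(None, 0))

# jit: compile the batched function with XLA on its first call.
fast_batched_predict = jax.jit(batched_predict)

out = fast_batched_predict(weights, batch)

# Reference result from an explicit Python loop over the rows.
reference = jnp.stack([predict(weights, row) for row in batch])
print(out.shape, bool(jnp.allclose(out, reference, atol=1e-5)))
```

Note that `predict` is written for a single example and never mentions the batch dimension; the batching and compilation are layered on from the outside, which is what “composable transformations” means in practice.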

Growth of the Ecosystem in 2026

Although JAX began with a minimalistic core, its ecosystem has grown significantly. Libraries such as Flax and Haiku now offer neural network abstractions similar to Keras or PyTorch Lightning. These tools help bridge the gap between JAX’s low-level power and high-level usability, allowing faster model development and experimentation.

Despite this growth, JAX is still seen as a framework best suited for experienced users or researchers comfortable with systems programming. Inside jit-compiled functions, branching on traced values requires functional constructs like jax.lax.cond instead of Python’s native if statements, which can be challenging for beginners but highly rewarding in performance-critical applications.
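Here is a minimal sketch of what that looks like in practice. The function name and inputs are hypothetical; the pattern is a `jax.lax.cond` call standing in for a Python `if` inside a jit-compiled function.

```python
import jax
import jax.numpy as jnp
from jax import lax


@jax.jit
def safe_scale(x):
    """Normalize x by its sum when the sum is positive, else return zeros."""
    total = jnp.sum(x)
    # Under jit, `total` is a traced value with no concrete boolean, so
    # `if total > 0:` would raise an error. lax.cond takes the predicate
    # and one function per branch instead.
    return lax.cond(
        total > 0,
        lambda v: v / jnp.sum(v),     # taken when the sum is positive
        lambda v: jnp.zeros_like(v),  # taken otherwise
        x,
    )


positive = safe_scale(jnp.array([1.0, 3.0]))
negative = safe_scale(jnp.array([-1.0, -3.0]))
print(positive, negative)
```

Both branches must return arrays of the same shape and dtype, which is one of the constraints that makes this style feel stricter than plain Python.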

Technical Benchmark: JAX in Action

| Feature | Description / Outcome |

|---|---|

| Programming Paradigm | Functional (immutable arrays, side-effect-free operations) |

| Compiler | JIT with XLA (just-in-time, machine-level optimization) |

| Parallelization Support | SPMD across accelerators with jax.pmap |

| Vectorization | Automatic via jax.vmap |

| Memory Efficiency | Lowest host RAM usage (3.29 GB in synthetic CNN test) |

| Training Time (Synthetic) | 99.44 seconds (L4 GPU, batch size 32) |

| Small-Scale Overhead | Slower in first run due to compile-first architecture |

| Large-Scale Efficiency | Outperforms other frameworks with repeated use |

| Deployment Flexibility | Limited production tools compared to TensorFlow/PyTorch |

Performance Comparison Table: JAX vs Other Deep Learning Tools (2026)

| Criteria | JAX | PyTorch | TensorFlow |

|---|---|---|---|

| JIT Compilation | First-class (via XLA) | Optional (torch.compile) | Available (XLA) |

| Parallel Execution | Excellent (pmap) | Moderate | High (TF + TPU) |

| Vectorization | Automated (vmap) | Available (torch.vmap) | Available (tf.vectorized_map) |

| Memory Footprint | Lowest in class | Moderate | Higher |

| Ease of Use | Steep learning curve | Beginner-friendly | Moderate |

| High-Level API | Via Flax/Haiku | Native | Keras |

| Ecosystem Maturity | Growing | Mature | Mature |

| Use Case Fit | Research & HPC | Research & Production | Enterprise Production |

User Feedback from the Research Community

A computational scientist working in the field of quantum AI research shared positive remarks about JAX, describing it as “incredible” for its simplicity and raw performance. One major advantage noted was the ability to bypass Python’s overhead using JIT compilation, which significantly accelerates training and inference on specialized hardware.

Many researchers transitioning from PyTorch or TensorFlow find JAX’s syntax and functional control flow initially unfamiliar. However, those with backgrounds in systems programming or C-like languages often adapt quickly and appreciate the low-level access and control that JAX provides.

Top Advantages of JAX for Advanced Deep Learning Work

| Benefit Category | Description |

|---|---|

| Performance Efficiency | Optimized execution on GPUs and TPUs using just-in-time (XLA) compilation |

| Composable Architecture | Functional transformations allow for modular code design |

| Automatic Batching | vmap simplifies batch processing for training large models |

| Clean and Testable Code | Functional style enhances reproducibility and debugging |

| Research Flexibility | Ideal for novel architecture design, simulations, and custom math |

| Lightweight Core | Lean framework with no unnecessary abstractions |

Challenges and Limitations

While JAX offers powerful tools for cutting-edge research, it is not yet as complete in production-ready tooling as TensorFlow or PyTorch. Features like built-in deployment pipelines, monitoring tools, or pre-trained model hubs are still limited. As a result, users often build their own wrappers or use JAX in conjunction with external platforms.

The library also requires more familiarity with functional programming principles. For example, instead of using mutable variables and standard control flow, users must rely on jax.lax constructs that operate on pure functions. This creates a learning curve, but also leads to more predictable and parallelizable code execution.
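As an illustration of replacing mutable variables and a Python loop, the sketch below computes a running total with `jax.lax.fori_loop` and the `.at[...].set(...)` update syntax. The function is a hypothetical example (in real code `jnp.cumsum` would do the same job); it exists only to show the pattern.

```python
import jax
import jax.numpy as jnp
from jax import lax


@jax.jit
def cumulative_sum(values):
    """Running total written without in-place mutation or a Python loop."""

    def body(i, carry):
        running, totals = carry
        running = running + values[i]
        # .at[i].set(...) returns a new array instead of mutating `totals`.
        return running, totals.at[i].set(running)

    initial = (jnp.zeros((), values.dtype), jnp.zeros_like(values))
    _, totals = lax.fori_loop(0, values.shape[0], body, initial)
    return totals


totals = cumulative_sum(jnp.array([1.0, 2.0, 3.0, 4.0]))
print(totals)
```

All loop state travels through the `carry` tuple, so the body stays a pure function of its inputs, which is what lets XLA compile the whole loop as a single unit.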

Conclusion

JAX stands out in 2026 as one of the most powerful deep learning frameworks for researchers and computational scientists. Its focus on performance, functional purity, and hardware-level optimization makes it a key tool in domains that require large-scale simulations or innovative model architectures.

Although it is not yet as widely adopted in production environments, JAX is rapidly gaining traction in labs, universities, and specialized AI startups. As its ecosystem continues to expand with libraries like Flax and Haiku, JAX is expected to play an even bigger role in shaping the future of high-performance AI development.

4. Hugging Face

Hugging Face has emerged as one of the top deep learning software platforms in 2026. More than just a software tool, it operates as a global hub for open-source AI development, often compared to the role GitHub plays in software engineering. With its expanding user base, diverse model repository, and enterprise-grade tools, Hugging Face has become essential for companies, researchers, and developers building machine learning solutions in natural language processing (NLP), computer vision, and multimodal AI.

The platform offers broad accessibility, community-driven innovation, and collaboration features, all centered around democratizing artificial intelligence.

Platform Scale and Global Adoption Metrics

Hugging Face serves as a central meeting point for millions of AI developers, organizations, and learners. By early 2026, the platform attracts more than 18 million monthly active visitors, offers over 2.2 million community-contributed models, and supports over 5 million registered users. These figures reflect the explosive rise in open-source AI activity.

A majority of users download smaller models—those under 1 billion parameters—demonstrating a shift toward efficient, lightweight AI systems that can run on mobile and edge devices. This preference aligns with broader industry trends focused on reducing latency, optimizing for privacy, and enhancing on-device performance.

Hugging Face Usage Statistics (2024–2026)

| Metric | Value in 2026 | Explanation |

|---|---|---|

| Monthly Active Visitors | 18 million | Worldwide AI developer and research traffic |

| Registered Active Users | Over 5 million | Individuals contributing or using hosted models |

| Community Models Hosted | More than 2.2 million | Open-source and proprietary models in NLP, CV, and more |

| Daily API Calls | Around 500,000 | Real-time access for inference, fine-tuning, and testing |

| Enterprise Subscriptions | 2,000+ organizations | Companies using Hugging Face for secure deployments |

| Model Download Focus | 92.48% under 1B parameters | Preference for efficiency and on-device inference |

| Top 50 Contributors’ Share of Downloads | 80.22% | Dominance of leading researchers and institutions |

Revenue Growth and Enterprise Usage

Hugging Face has seen rapid revenue expansion, reaching approximately USD 130 million by 2024—nearly doubling from the previous year. This growth is driven by the increasing demand for accessible, high-quality models in enterprise settings.

More than 10,000 companies, including major players like Intel, Pfizer, Bloomberg, and eBay, now use Hugging Face for building AI systems, conducting experiments, or deploying custom solutions. These organizations benefit from enterprise features like private model hosting, secure collaboration environments, and scalable APIs.

Enterprise Features That Set Hugging Face Apart

| Feature | Business Value in 2026 |

|---|---|

| Private Repositories | Secure model hosting for internal development |

| Enterprise Hub | Access to curated models and infrastructure integrations |

| AutoTrain and Inference API | Quick model training and deployment without extensive coding |

| Version Control for Models | Enables collaboration, testing, and rollback functionality |

| Community-Driven Support | Ongoing contributions from top AI labs and developers |

| Multimodal AI Support | Models covering text, vision, audio, and combined inputs |

Framework Comparison: Hugging Face vs Other AI Platforms (2026)

| Feature/Criteria | Hugging Face | TensorFlow | PyTorch | JAX |

|---|---|---|---|---|

| Model Repository | 2.2M+ Models | Limited | Moderate | Limited |

| Collaboration Tools | Built-in | External tools | Manual setup | Minimal |

| Use Case Specialization | NLP, CV, Multimodal | General | General | High-performance |

| Deployment via API | Yes | Custom setup | Custom setup | Limited |

| Open-Source Community Size | Largest | Large | Large | Smaller |

| On-Device Optimized Models | Widely Available | Via TF Lite | PyTorch Mobile / ExecuTorch | Not focused |

Real-World Feedback from AI Practitioners

Hugging Face is widely regarded by AI professionals as the go-to platform for open-source deep learning models. In a 2026 review from an AI product manager in the financial technology sector, the platform was praised for its simplicity, breadth of models, and strong community support. Even non-technical users such as IT recruiters found the platform useful for learning and exploring AI capabilities without requiring deep programming knowledge.

However, there are limitations. Due to the open nature of its repository, not all models meet strict enterprise-level standards. Accuracy and quality can vary depending on the source and intended use case. Therefore, businesses are advised to thoroughly validate models internally before integrating them into production environments.

Strengths and Limitations of Hugging Face in 2026

| Category | Strengths | Limitations |

|---|---|---|

| Accessibility | Easy-to-use platform for all user levels | Less structured support for complex enterprise cases |

| Collaboration | Excellent tools for sharing, versioning, and co-creation | Model quality varies widely |

| Community Engagement | Active contributors from academia and industry | Fewer built-in production tools than TF/PyTorch |

| Model Diversity | Massive selection across domains and languages | Requires due diligence for production readiness |

| Revenue Model | Strong enterprise support with freemium tools | Some advanced features are gated behind paywalls |

Conclusion

By 2026, Hugging Face has become one of the top 10 deep learning software platforms, revolutionizing how artificial intelligence is developed, shared, and deployed. With millions of users and models, a robust API infrastructure, and growing enterprise adoption, it stands at the forefront of open-source AI innovation.

Whether for academic research, rapid prototyping, or scalable enterprise deployment, Hugging Face provides the tools, models, and community needed to move AI forward. As the industry continues to evolve, Hugging Face remains a central platform where developers and organizations can collaborate, experiment, and deliver high-impact machine learning applications.

5. NVIDIA AI Enterprise

NVIDIA AI Enterprise has become one of the most trusted and advanced software platforms in the deep learning ecosystem by 2026. It is designed to support the entire artificial intelligence development lifecycle—from training models to deploying them in real-world production environments—while ensuring enterprise-grade security, reliability, and performance.

Built specifically to complement NVIDIA’s industry-dominating GPU hardware, the platform offers a tightly integrated, high-performance solution for organizations working with large-scale data, complex AI models, and mission-critical applications. With the rise of generative AI, computer vision, and intelligent automation across sectors, NVIDIA AI Enterprise is now recognized as one of the top 10 deep learning software platforms globally.

Comprehensive Software Built Around Hardware Leadership

As of 2026, NVIDIA controls approximately 92–94% of the global GPU market. Leveraging this dominance, the company has developed a software stack that runs optimally on its hardware offerings such as the A100, H100, and the latest H200 GPUs. NVIDIA AI Enterprise includes critical tools like:

- CUDA for GPU computing acceleration

- TensorRT for high-speed model inference

- NeMo for developing and deploying large language and generative models

- cuDNN for GPU-accelerated deep neural network primitives used in training and inference

The platform also features secure containers, pre-trained models, SDKs, and APIs that support a wide variety of use cases—ranging from enterprise analytics and autonomous systems to large-scale generative AI.

Bundled Access with Hardware Purchases

NVIDIA’s commercial strategy in 2026 includes bundling the AI Enterprise suite with its premium GPU hardware. Buyers of high-end models like the H100 or H200 often receive a complimentary five-year subscription to the software suite. This ensures organizations can immediately deploy high-performance AI infrastructure without requiring additional investment in software licenses.

Licensing, Pricing Models, and Educational Access

NVIDIA AI Enterprise offers flexible licensing options tailored to different use cases and organization sizes. Enterprises can select subscription plans based on duration, opt for a one-time perpetual license, or purchase access on-demand through cloud marketplaces.

| License Type | Term | Price (Per GPU) | Support Level |

|---|---|---|---|

| Subscription | 1 Year | USD 4,500 | Business Standard |

| Subscription | 3 Years | USD 13,500 | Business Standard |

| Subscription | 5 Years | USD 18,000 | Business Standard |

| Perpetual | Lifetime | USD 22,500 | 5-Year Initial Support |

| Education / Inception | 1 Year | USD 1,125 | For Startups and Labs |

| Cloud On-Demand | Per Hour | USD 1.00/hr | Up to 3 API Calls |

This flexible pricing structure makes it easier for businesses, research labs, and educational institutions to access high-quality deep learning infrastructure that scales with their needs.
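To compare the options in the table on a like-for-like basis, the prices can be reduced to a cost per GPU per year. The sketch below uses only the figures listed above; the helper names are made up, and it assumes straight-line spreading with no discounting and no support renewal fees after the perpetual license's initial five years.

```python
# Per-GPU list prices in USD, copied from the licensing table above.
SUBSCRIPTION_PRICES = {1: 4_500, 3: 13_500, 5: 18_000}  # term in years -> total price
PERPETUAL_PRICE = 22_500


def annualized_cost(total_price: float, term_years: int) -> float:
    """Spread a term price evenly over its years (no discounting or taxes)."""
    return total_price / term_years


def break_even_years(annual_rate: float) -> float:
    """Years of subscription spend that add up to the perpetual license price."""
    return PERPETUAL_PRICE / annual_rate


annual = {
    term: annualized_cost(price, term)
    for term, price in SUBSCRIPTION_PRICES.items()
}

for term, cost in annual.items():
    print(
        f"{term}-year subscription: USD {cost:,.0f} per GPU per year, "
        f"perpetual breaks even after {break_even_years(cost):.2f} years"
    )
```

On these numbers, the 1-year and 3-year terms both work out to USD 4,500 per GPU per year, the 5-year term to USD 3,600, and the perpetual license costs the same as five consecutive 1-year subscriptions.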

Key Features Driving Enterprise Adoption

| Feature Area | Description |

|---|---|

| GPU Acceleration | Native optimization for all NVIDIA GPUs (A100, H100, H200) |

| Full-Stack AI Toolkit | Includes CUDA, TensorRT, NeMo, RAPIDS, cuDNN |

| Enterprise Security & Support | Validated containers, certified deployment pipelines |

| Model Explainability | Offers unencrypted pre-trained models for transparency/debugging |

| Performance Optimization | Built-in auto-tuning for high-throughput inference/training |

| Seamless IT Integration | Easily connects with existing enterprise infrastructure |

| Deployment Flexibility | Available on-premise, hybrid, and through cloud marketplaces |

Technical Comparison Matrix: NVIDIA AI Enterprise vs Other Leading Platforms

| Capability | NVIDIA AI Enterprise | TensorFlow | PyTorch | JAX | Hugging Face |

|---|---|---|---|---|---|

| Optimized for NVIDIA Hardware | Yes | Partial | Partial | Partial | Partial (via backend framework) |

| Enterprise Security | High (certified suite) | Moderate | Community-Driven | Low | Varies |

| Support for Pre-Trained Models | Yes (NeMo, unencrypted) | Yes | Yes | Limited | Extensive (community) |

| Ease of Deployment | High (containers, APIs) | Moderate | Moderate | Low | High (via API) |

| Performance on Large Datasets | Excellent | Good | Good | Very Good | Depends on backend |

| Toolchain Depth | Deep (hardware-software stack) | Moderate | Strong (ecosystem) | Technical, Low-Level | Focused on hosting |

Enterprise Feedback and User Experience Insights

Real-world users, particularly in mid-sized tech companies and large enterprises, report that NVIDIA AI Enterprise delivers strong performance when processing very large datasets. Site Reliability Engineers (SREs) specifically appreciate how the suite integrates with traditional IT infrastructure, reducing the time needed to deploy AI applications.

The availability of unencrypted pre-trained models has proven valuable for explainability, debugging, and fine-tuning—important features in regulated industries like healthcare and finance.

However, reviews also acknowledge key limitations. Both the software and the hardware are expensive, which can be a challenge for smaller businesses or startups with limited budgets. Additionally, the platform has a steeper learning curve than more user-friendly tools like Hugging Face or Keras, particularly for teams without strong AI or DevOps experience.

Strengths and Challenges of NVIDIA AI Enterprise

| Category | Strengths | Limitations |

|---|---|---|

| Performance Optimization | Superior acceleration for large-scale training/inference | Requires NVIDIA hardware for best results |

| Security & Compliance | Enterprise-ready with validated AI workflows | Steep learning curve for non-experts |

| Integrated Ecosystem | Full stack from model to deployment | Limited flexibility outside NVIDIA infrastructure |

| Cost Efficiency (at Scale) | Bundled with high-end GPU purchases for large deployments | High upfront licensing and hardware costs |

Conclusion

NVIDIA AI Enterprise stands out in 2026 as a leading choice for organizations seeking a reliable, scalable, and secure AI software infrastructure. Its full-stack integration, from silicon to software, makes it a powerful tool for enterprises building production-level artificial intelligence systems.

By combining industry-leading performance, enterprise-grade support, and compatibility with the world’s most widely used GPUs, NVIDIA AI Enterprise has secured its place among the top 10 deep learning software platforms globally. For businesses with the resources to invest in top-tier AI infrastructure, it offers unmatched capabilities to deploy complex models at scale with confidence and speed.

6. Databricks Mosaic AI

Databricks Mosaic AI has become one of the most important platforms in the global deep learning ecosystem by 2026. Positioned as a unified “Data Intelligence Platform,” Databricks combines advanced machine learning tools with powerful data analytics, enabling companies to manage everything from raw data to AI-powered applications within a single workspace.

Following Databricks’ 2023 acquisition of MosaicML, the platform has gained new capabilities tailored to large-scale model training, AI governance, and secure deployment. As one of the top 10 deep learning software solutions in 2026, Databricks Mosaic AI delivers a balanced combination of data infrastructure, machine learning automation, and enterprise-grade scalability.

A Unified Foundation for AI and Data Operations

Databricks Mosaic AI is built on the open Lakehouse architecture—a hybrid of data lakes and data warehouses. This design allows data engineers, analysts, and AI practitioners to access structured and unstructured data in one place, without the typical fragmentation found in siloed systems.

Mosaic AI serves as the platform’s dedicated suite for building, deploying, and governing machine learning models and AI agents. It includes:

- Mosaic AI Gateway: A unified interface for accessing various foundation models securely

- Mosaic AI Safeguards: Tools that automatically protect sensitive data and enforce ethical usage

- Lakehouse Governance Layer: Centralized policies to manage data access, quality, and compliance

- Real-Time Collaborative Notebooks: Shared development spaces supporting Python, SQL, R, and Scala

These capabilities ensure that teams can collaborate effectively, work across diverse programming languages, and meet both technical and regulatory requirements when building AI systems.
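
As a rough illustration of what automatic protection of sensitive data can involve, the sketch below redacts two kinds of personally identifiable information with regular expressions. It is not Databricks’ implementation or API: the patterns, labels, and the `redact_pii` function are assumptions made for this example, and production safeguards need far broader coverage.

```python
import re

# Illustrative patterns only (hypothetical, not taken from any vendor tool).
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),  # US-style social security number
}


def redact_pii(text: str) -> str:
    """Replace each match with a bracketed placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    print(redact_pii("Contact jane.doe@example.com, SSN 123-45-6789."))
```

A filter like this would typically run on prompts and responses before they are logged or forwarded to a model, so that raw identifiers never leave the governed environment.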

Enterprise-Level Capabilities and Distributed Processing

Databricks is tightly integrated with Apache Spark, which powers its ability to handle vast volumes of data in parallel across distributed systems. This makes it a preferred solution for financial institutions, healthcare organizations, telecom companies, and large technology firms that rely on real-time analytics and AI-driven automation.

Technical Capabilities of Databricks Mosaic AI

| Feature | Description / Outcome |

|---|---|

| Lakehouse Architecture | Combines data lakes and warehouses for unified storage |

| Programming Language Support | Python, SQL, Scala, R within collaborative notebooks |

| Distributed Computing Engine | Built on Apache Spark for scalable parallel processing |

| AI Governance Layer | Controls access, enforces policies for safe AI development |

| Mosaic AI Gateway | Central model query interface across providers |

| Safeguards for Sensitive Data | Automatic PII filtering, usage monitoring |

| Cluster Management Tools | Auto-scaling and auto-termination to optimize cost |

| Deployment Flexibility | Runs on AWS, Azure, and Google Cloud |

Feature Matrix: Databricks Mosaic AI vs Other Deep Learning Platforms

| Feature Area | Databricks Mosaic AI | TensorFlow | PyTorch | NVIDIA AI Enterprise | Hugging Face |

|---|---|---|---|---|---|

| Integrated Data Platform | Yes (Lakehouse) | No | No | No | No |

| Distributed Computing | Apache Spark | Manual setup | Manual setup | Hardware-bound | Cloud-hosted |

| Collaborative Notebooks | Yes | Partial (Colab) | Partial (Jupyter) | No | No |

| Real-Time Model Governance | Yes | Partial | No | Yes | No |

| Foundation Model Gateway | Mosaic AI Gateway | None | None | NeMo/Triton | Transformers API |

| Multi-Language Support | Python, SQL, R, Scala | Python (primary), C++, JavaScript | Python (primary), C++ | Python/C++ | Python |

User Feedback and Real-World Adoption Trends

User reviews on platforms like G2 and Gartner Peer Insights consistently highlight Databricks as one of the most effective tools for enterprise-level AI and data analytics.

A data analyst at a financial services company praised the platform’s real-time collaborative notebooks, which allow teams to code together across departments and languages without version control issues. The centralized nature of Databricks’ data management eliminates duplication and inefficiency, enabling teams to focus on model development and business insights.

One highly mentioned feature is the auto-termination of unused compute clusters, which helps organizations control costs without compromising processing speed. However, users have also noted a few downsides: performance can become sluggish with extremely large datasets, and pricing may be challenging for smaller startups or teams with limited budgets.
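
The logic behind auto-termination is simple enough to sketch. The example below is a minimal illustration, not Databricks’ actual scheduler: the function names, the 30-minute timeout, and the hourly rate are all assumptions chosen for the example.

```python
from datetime import datetime, timedelta


def should_terminate(last_activity: datetime, now: datetime,
                     idle_timeout: timedelta) -> bool:
    """Return True once the cluster has been idle for at least idle_timeout."""
    return now - last_activity >= idle_timeout


def idle_cost_avoided(hourly_rate: float, idle_hours: float,
                      timeout_hours: float) -> float:
    """Spend avoided by stopping after timeout_hours instead of idling for idle_hours."""
    return hourly_rate * max(idle_hours - timeout_hours, 0.0)


if __name__ == "__main__":
    last = datetime(2026, 1, 1, 12, 0)
    now = datetime(2026, 1, 1, 12, 45)
    print(should_terminate(last, now, timedelta(minutes=30)))
    # Hypothetical cluster at USD 2.00/hour left idle overnight for 10 hours.
    print(idle_cost_avoided(hourly_rate=2.0, idle_hours=10, timeout_hours=0.5))
```

A shorter timeout saves more money but makes it more likely that a user returns to a stopped cluster and has to wait for a restart.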

Performance and User Satisfaction Metrics (2026)

| Metric | 2026 Value / Rating |

|---|---|

| Overall User Satisfaction | 8.8 / 10 |

| Notebook Collaboration Impact | 266 user mentions as productivity boost |

| Data Processing Scalability | High (via Apache Spark) |

| Safety & Governance Tools | Highly rated for AI policy control |

| Performance Under Load | Moderate (slows on massive datasets) |

| Cost Efficiency for SMBs | Considered expensive by some users |

Strengths and Limitations of Databricks Mosaic AI

| Category | Strengths | Limitations |

|---|---|---|

| Unified Workspace | Combines data, ML, and analytics in one place | May be overpowered for small projects |

| Collaboration Tools | Real-time multi-language notebooks for teams | Can be sluggish with very large datasets |

| Data Governance | Built-in policies for privacy, compliance, and model tracking | Initial setup complexity for less experienced teams |

| Cloud Integration | Supports multi-cloud and hybrid models | Higher operational costs compared to open-source tools |

| Automation and Scaling | Auto-scaling and resource management for Spark clusters | Requires Spark knowledge for advanced optimization |

Conclusion

By 2026, Databricks Mosaic AI has secured its position as a leading deep learning platform, especially for large enterprises seeking a unified solution for data management, machine learning, and AI governance. With powerful distributed computing, real-time collaboration, and strong safeguards for ethical AI use, it is well-suited for industries that demand both performance and compliance.

Among the top 10 deep learning software platforms in the world, Databricks Mosaic AI stands out for its enterprise readiness, collaborative flexibility, and data-centric design. It continues to be a preferred choice for organizations that want to bridge the gap between raw data and intelligent decision-making at scale.

7. DataRobot

DataRobot has firmly positioned itself among the top 10 deep learning software platforms in 2026. Known originally for pioneering AutoML (Automated Machine Learning), the platform has evolved into a powerful “Agent Workforce Platform” designed for enterprise-scale deployment of AI agents, machine learning models, and intelligent automation.

By combining automated model development with enterprise-grade governance and deployment tools, DataRobot enables organizations to maximize the impact of artificial intelligence while reducing operational risk. Its capabilities are especially suited for large companies that demand scalable AI solutions with high accuracy and fast implementation timelines.

Adoption Across Large Enterprises

A defining strength of DataRobot in 2026 is its deep penetration in the large enterprise segment. Approximately 63% of its user base consists of organizations with over 1,000 employees. These companies rely on DataRobot to build and manage predictive models across complex business environments, including finance, healthcare, education, logistics, and retail.

The platform’s pricing reflects its premium positioning. The median annual contract value for enterprise customers is USD 215,200, demonstrating the platform’s focus on high-impact AI initiatives.

Enterprise Usage Metrics and Market Performance

| Metric | Value in 2026 | Description |

|---|---|---|

| Median Annual Buyer Spend | USD 215,200 | Reflects high-value, enterprise-level AI investments |

| Market Share in Predictive Analytics | 6.7% | Competes with Alteryx, Anaplan, and other predictive platforms |

| Organizations with >1,000 Employees | 63% of user base | Indicates strong enterprise adoption |

| Overall User Rating (G2) | 4.7 / 5.0 | Based on thousands of user reviews |

| PeerSpot User Score | 8.2 / 10 | Highlights satisfaction from enterprise IT teams |

| Customer Recommendation Rate | 94% | Strong community endorsement for effectiveness and reliability |

| Fraud Loss Reduction (Case Study) | 80% | Specific outcome from financial sector deployment |

Core Features Enhancing Predictive Modeling

| Capability Area | Feature Description | Enterprise Impact |

|---|---|---|

| AutoML Workflow | End-to-end automation of model creation and tuning | Reduces development time and increases model accuracy |

| AI Governance Tools | Model approval, compliance tracking, and audit features | Ensures responsible AI deployment across industries |

| Multi-Agent Orchestration | Intelligent agents for automating predictions and actions | Supports large-scale automation of repetitive tasks |

| Time-Series Modeling | Built-in forecasting with seasonality and anomaly detection | Useful for finance, operations, and demand planning |

| Real-Time Scoring | Continuous prediction capabilities integrated via API | Enables dynamic decision-making in production environments |

| Custom Model Integration | Supports imported models from R, Python, and external libraries | Enhances flexibility for hybrid AI workflows |

| Cloud and On-Premise Support | Flexible deployment based on regulatory and business needs | Accommodates varying enterprise infrastructure requirements |
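
The core idea behind an AutoML workflow, trying several candidate models and keeping the one that scores best on held-out data, can be sketched in a few lines. The example below applies it to a toy time series. It is a simplified illustration rather than DataRobot’s API or algorithm: the three candidate forecasters, the synthetic data, and every function name are assumptions made for this sketch.

```python
from statistics import mean


def naive_last(history, horizon):
    """Repeat the most recent observation."""
    return [history[-1]] * horizon


def historical_mean(history, horizon):
    """Forecast the average of all past observations."""
    return [mean(history)] * horizon


def seasonal_naive(history, horizon, season=4):
    """Repeat the value observed one season earlier."""
    return [history[-season + (i % season)] for i in range(horizon)]


def mae(actual, predicted):
    """Mean absolute error between two equal-length sequences."""
    return mean(abs(a - p) for a, p in zip(actual, predicted))


def select_best(series, holdout, candidates):
    """Backtest each candidate on the last `holdout` points; return (name, error) of the best."""
    train, test = series[:-holdout], series[-holdout:]
    scores = {name: mae(test, fn(train, holdout)) for name, fn in candidates.items()}
    best = min(scores, key=scores.get)
    return best, scores[best]


if __name__ == "__main__":
    # Synthetic quarterly series with a repeating pattern (made-up data).
    series = [10, 20, 30, 40] * 4
    candidates = {
        "naive_last": naive_last,
        "historical_mean": historical_mean,
        "seasonal_naive": seasonal_naive,
    }
    print(select_best(series, holdout=4, candidates=candidates))
```

Because the synthetic series repeats every four points, the seasonal forecaster wins the backtest with zero error. Commercial platforms search a far larger space of models and preprocessing steps, but the select-by-validation loop is the same in spirit.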

Comparison Matrix: DataRobot vs Other Leading Deep Learning Platforms (2026)

| Feature/Platform | DataRobot | TensorFlow | PyTorch | Databricks Mosaic AI | NVIDIA AI Enterprise |

|---|---|---|---|---|---|

| Focus Area | AutoML & AI Agents | General DL | General DL | Unified Data & AI | GPU-optimized DL |

| Enterprise Automation | Yes | No | No | Partial | Yes |

| Predictive Modeling (AutoML) | Strong | Manual | Manual | Moderate | Partial (NeMo NLP) |

| AI Governance | Advanced | Limited | Limited | Advanced | Strong |

| Time-Series Forecasting | Native support | Requires coding | Requires coding | Supported via packages | Not a focus |

| Prebuilt AI Agents | Yes | No | No | No | No |

| Deployment Flexibility | Cloud & On-Prem | Cloud, Edge | Cloud, Edge | Cloud & Hybrid | Cloud, On-Prem |

Practical Use Cases and User Feedback

DataRobot is used by many professionals across industries to automate complex tasks such as predicting student enrollment, identifying fraud, and forecasting patient admissions. One senior data scientist in higher education shared that the platform could detect anomalies and flag inconsistent student records in minutes—tasks that previously took hours to complete manually.

Another user in the healthcare sector praised the platform for accelerating the development of predictive models and delivering higher accuracy than manual coding approaches. These real-world applications reflect DataRobot’s ability to improve efficiency and accuracy while shortening time-to-value for AI-driven decision-making.

However, some small and mid-sized organizations report that the platform’s high pricing can be a challenge. For teams with limited budgets, the cost may be a barrier to adoption, especially when evaluating against open-source or freemium alternatives. Additionally, like most enterprise platforms, there is a learning curve for new users unfamiliar with AI lifecycle management tools.

Strengths and Limitations of DataRobot in 2026

| Category | Strengths | Limitations |

|---|---|---|

| Predictive Accuracy | Consistently improves outcomes with automated tuning | Requires internal validation for high-risk use cases |

| Workflow Automation | End-to-end automation saves time across the AI lifecycle | High upfront cost for smaller businesses |

| Platform Usability | No-code and low-code tools for business analysts | Advanced customization requires some ML expertise |

| AI Governance | Built-in compliance and audit controls | May be overly complex for basic AI tasks |

| Scalability | Supports large data pipelines and concurrent model training | Performance varies depending on deployment environment |

Conclusion

DataRobot in 2026 is a comprehensive enterprise AI automation platform that helps organizations scale machine learning initiatives while maintaining governance, efficiency, and accuracy. With features tailored for time-series forecasting, AutoML pipelines, and AI agent orchestration, it serves as a powerful tool for enterprises aiming to deliver intelligent predictions across departments.

Its premium pricing reflects the high value it delivers in terms of automation and predictive performance. As one of the top 10 deep learning software platforms in the world, DataRobot continues to drive enterprise AI transformation by making complex machine learning workflows easier, faster, and more impactful.

8. Google Cloud Vertex AI

Google Cloud Vertex AI has become one of the most reliable and advanced deep learning platforms in 2026. It is recognized globally for offering a complete and seamless machine learning environment—covering data preparation, model training, evaluation, deployment, and monitoring—all within one unified system.

Unlike fragmented ML workflows that require switching between tools, Vertex AI enables companies to move smoothly from development to production in a fully integrated pipeline. Its built-in compatibility with Google’s cloud ecosystem, BigQuery, and Gemini foundation models makes it an ideal choice for organizations focused on performance, scalability, and cost transparency.

Core Platform Capabilities and Cloud-Native Architecture

Vertex AI delivers a flexible, cloud-native environment that connects closely with other Google Cloud services. One of its biggest advantages in 2026 is the direct integration with Google’s own foundation models, such as the Gemini 2.5 family. These models cover tasks like text generation, multimodal processing, and chat-based AI, all natively accessible from within Vertex AI.

The platform allows for both no-code AutoML solutions and fully customizable training workflows. Users can select their preferred computing infrastructure (CPU, GPU, or TPU) and scale up or down as needed. It supports both real-time and batch predictions and offers enterprise-grade tools for model monitoring, explainability, and security.

Vertex AI Usage and Pricing Overview (2026)

The pricing structure of Vertex AI is usage-based and designed for transparency. Organizations are charged based on how much compute, storage, and model interaction they use, allowing for detailed control over budget and scaling.

| Service Type | Pricing Metric | Cost in USD (2026) |

|---|---|---|

| AutoML Model Training | Per Node Hour | 3.465 |

| Custom Model Training | Per Hour (Global) | 21.25 |

| Gemini 2.5 Pro (Text Input) | Per 1 Million Tokens | 1.25 |

| Gemini 2.5 Pro (Text Output) | Per 1 Million Tokens | 10.00 |

| Text/Chat Generation | Per 1,000 Characters | 0.0001 |

| NVIDIA Tesla T4 GPU | Per Hour | 0.4025 |

| NVIDIA H100 (80GB) | Per Hour | 9.796 |

| NVIDIA H200 (141GB) | Per Hour | 10.708 |

This detailed cost granularity enables users to optimize spending by selecting the right resource type for the right task. For example, lightweight experiments can be run using lower-tier GPUs, while final training for large models can utilize high-end H100 or H200 GPUs.
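
A small estimator makes this kind of budgeting easier to reason about. The sketch below uses only the prices from the table above; the function names are illustrative, and the GPU figures cover the accelerator rate alone, on the assumption that the host machine and storage are billed separately.

```python
# Prices copied from the table above (USD); check current pricing before budgeting.
GEMINI_25_PRO_INPUT_PER_M = 1.25    # per 1 million input tokens
GEMINI_25_PRO_OUTPUT_PER_M = 10.00  # per 1 million output tokens
GPU_HOURLY = {"T4": 0.4025, "H100": 9.796, "H200": 10.708}


def token_cost(input_tokens: int, output_tokens: int) -> float:
    """Cost of a Gemini 2.5 Pro text workload at the listed per-million rates."""
    return (input_tokens / 1_000_000 * GEMINI_25_PRO_INPUT_PER_M
            + output_tokens / 1_000_000 * GEMINI_25_PRO_OUTPUT_PER_M)


def gpu_cost(gpu: str, hours: float, count: int = 1) -> float:
    """Accelerator-only cost for `count` GPUs running for `hours`."""
    return GPU_HOURLY[gpu] * hours * count


if __name__ == "__main__":
    print(f"2M input + 0.5M output tokens: USD {token_cost(2_000_000, 500_000):.2f}")
    print(f"10-hour experiment on one T4: USD {gpu_cost('T4', 10):.2f}")
    print(f"8-hour run on eight H100s:    USD {gpu_cost('H100', 8, 8):.2f}")
```

Note how output tokens cost eight times as much as input tokens at these rates, so generation-heavy workloads are priced very differently from summarization or classification workloads of the same size.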

Feature Summary of Google Vertex AI

| Feature Category | Description | Business Impact |

|---|---|---|

| Full ML Lifecycle Support | Covers data ingestion to model deployment | Streamlined AI development process |

| Integration with BigQuery | Native support for querying and connecting datasets | Saves time in accessing and prepping data |

| Support for Gemini Models | Built-in access to Google’s Gemini 2.5 foundation models | High-performance generative AI out-of-the-box |

| No-Code and Code-Based Tools | Options for AutoML and custom ML pipelines | Accessible to both beginners and advanced users |

| Cloud Compute Optimization | Flexible use of T4, H100, H200 GPUs | Scales with workload demands |

| Inference and Monitoring | Real-time endpoints and logging | Ensures performance tracking and reliability |

| Usage-Based Pricing | Costs based on compute, tokens, and storage | Transparent budgeting for AI teams |

Platform Comparison: Vertex AI vs Other Leading Deep Learning Platforms

| Key Feature | Vertex AI | TensorFlow | PyTorch | Hugging Face | Databricks Mosaic AI |

|---|---|---|---|---|---|

| Unified Workflow (End-to-End) | Yes | Partial | Partial | No | Yes |

| Foundation Model Access | Gemini 2.5 | None | None | Transformers API | Mosaic AI Gateway |

| AutoML Capabilities | Native | Via add-ons (KerasTuner, AutoKeras) | No | No | Partial |

| Cloud-Native Deployment | Yes (GCP) | Limited | Manual | Cloud-hosted | Cloud and Hybrid |

| Real-Time Inference | Yes | Yes (TF Serving) | Yes (TorchServe) | Yes (API) | Yes |

| Pricing Flexibility | High (usage-based) | Variable | Variable | Depends on usage | Subscription-based |

| Ease of Use | High | Medium | Medium | High | Medium |

User Feedback and Real-World Applications

Machine learning engineers and data scientists report high satisfaction when using Vertex AI, especially due to its easy integration with Google Cloud Storage and other GCP services. In real reviews, professionals highlight that Vertex AI simplifies the process of taking models from prototype to production by offering a consistent interface and built-in optimization tools.

One ML engineer from a retail startup noted that the platform’s intuitive dashboard, automatic model tracking, and seamless pipeline creation saved their team several weeks of manual coding and configuration work. Users also appreciated the fine-grained control over training workflows and real-time endpoint management.

However, one frequently mentioned limitation is the absence of a “scale-to-zero” feature. This means that even when deployed endpoints are idle, users still incur infrastructure charges, making it less ideal for teams with sporadic or seasonal usage patterns.
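
The effect of an always-on endpoint is easiest to see as cost per hour of actual use. The sketch below is a back-of-the-envelope illustration under stated assumptions: it bills a single accelerator at a flat hourly rate, ignores the host machine, and uses hypothetical function names.

```python
def monthly_always_on_cost(hourly_rate: float, days: int = 30) -> float:
    """Cost of keeping one replica running around the clock."""
    return hourly_rate * 24 * days


def cost_per_busy_hour(hourly_rate: float, utilization: float) -> float:
    """Effective price of each hour that actually serves traffic."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return hourly_rate / utilization


if __name__ == "__main__":
    t4_rate = 0.4025  # T4 accelerator rate from the pricing table above
    print(f"Always-on for 30 days: USD {monthly_always_on_cost(t4_rate):.2f}")
    print(f"Per busy hour at 10% utilization: USD {cost_per_busy_hour(t4_rate, 0.10):.3f}")
```

At 10% utilization, every useful hour effectively costs ten times the listed rate, which is why teams with bursty traffic often prefer batch prediction or tear endpoints down between uses.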

Strengths and Challenges of Vertex AI in 2026

| Category | Strengths | Challenges |

|---|---|---|

| Workflow Efficiency | Unified environment streamlines all ML tasks | Lacks scale-to-zero for cost optimization in idle periods |

| Model Access | Gemini models embedded for rapid deployment | Custom model hosting may require manual configuration |

| Developer Experience | Intuitive UI and code support for all skill levels | Can be overkill for simple, small-scale experiments |

| Pricing Transparency | Usage-based billing with detailed breakdowns | Complex pricing for larger generative models |

| Cloud Ecosystem | Deep GCP integration improves data pipeline performance | Tied to Google Cloud, less flexible for multi-cloud users |

Conclusion

In 2026, Google Cloud Vertex AI stands out as one of the most comprehensive and user-friendly platforms in the deep learning space. It supports the entire machine learning lifecycle, offers access to advanced foundation models, and is well-integrated with cloud infrastructure—making it an ideal choice for enterprises, startups, and research teams alike.

With its usage-based pricing, seamless integration with BigQuery and Gemini models, and support for both AutoML and custom development, Vertex AI earns its place among the top 10 deep learning software platforms in the world. Its focus on usability, scalability, and intelligent automation makes it a strong contender for any AI-driven organization aiming to deploy reliable, high-performing machine learning systems in the cloud.

9. Amazon SageMaker

Amazon SageMaker remains a dominant force in the global deep learning and machine learning landscape in 2026. It is among the most widely adopted managed AI platforms, used by more than 59% of practitioners whose primary cloud infrastructure is AWS. Built to support every stage of the machine learning lifecycle, SageMaker offers strong scalability, tight integration with the AWS ecosystem, and advanced deployment tools for real-time and batch inference.

Positioned as one of the top 10 deep learning software platforms in the world, Amazon SageMaker serves a diverse range of industries—from e-commerce and finance to manufacturing and healthcare—by making it easier for teams to build, train, and deploy models at scale.

End-to-End Machine Learning Capabilities

Amazon SageMaker provides a full suite of tools that cover data labeling, feature engineering, model development, experimentation, versioning, monitoring, and deployment. It supports both code-first development for expert data scientists and low-code/no-code interfaces for business analysts.

Key components include:

- SageMaker Ground Truth: For automated and manual data labeling

- SageMaker Studio: An integrated development environment (IDE) for building and managing ML workflows

- SageMaker Canvas: A no-code platform for business users to create models without writing code

- Time-series forecasting (available in SageMaker Canvas; Amazon Forecast is a separate AWS service): Purpose-built for automated time-series prediction

- SageMaker Pipelines: Native MLOps tool for CI/CD workflows

- SageMaker Model Monitor: Real-time drift detection and model quality tracking
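
To give a feel for what drift detection measures, the sketch below computes the Population Stability Index (PSI), a common statistic for comparing a feature’s distribution at training time with what a deployed model sees. This is a generic illustration and not a description of how SageMaker Model Monitor works internally. The function name and bin values are assumptions, and the 0.2 alert threshold is only a widely used rule of thumb.

```python
import math


def psi(expected_fractions, actual_fractions, eps=1e-6):
    """Population Stability Index over matching histogram bins.

    Each argument is a list of bin fractions that sums to about 1.
    `eps` guards against log(0) when a bin is empty.
    """
    total = 0.0
    for e, a in zip(expected_fractions, actual_fractions):
        e, a = max(e, eps), max(a, eps)
        total += (a - e) * math.log(a / e)
    return total


if __name__ == "__main__":
    training = [0.25, 0.25, 0.25, 0.25]    # made-up baseline distribution
    production = [0.10, 0.20, 0.30, 0.40]  # made-up live distribution
    score = psi(training, production)
    print(f"PSI = {score:.3f}", "(drift suspected)" if score > 0.2 else "(stable)")
```

A monitoring job would compute a score like this per feature on a schedule and raise an alert when it crosses the chosen threshold.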

Feature Summary of Amazon SageMaker in 2026

| Capability Area | Description | Impact on ML Workflow |

|---|---|---|

| Data Labeling | SageMaker Ground Truth with built-in automation | Faster and more accurate data preparation |

| Development Environment | SageMaker Studio IDE and Canvas for no-code use | Enables collaboration between tech and non-tech teams |

| Model Deployment Options | Real-time, batch, and multi-model endpoints | Scales AI apps quickly and efficiently |

| Cost Management | Free tier with 4,000 API requests, detailed pricing tiers | Encourages early experimentation at lower cost |

| MLOps Integration | Pipelines, feature store, registry, and monitoring tools | Full automation of model versioning and lifecycle control |

| Cloud Integration | Native access to AWS services (S3, EC2, Lambda, IAM) | Seamless interoperability with existing AWS infrastructure |

| Performance Optimization | GPU, CPU, and inference optimization support | Delivers faster training and lower latency predictions |

Cost and Resource Flexibility

Amazon SageMaker’s pricing is structured to support a wide range of workloads. While it offers a generous free tier for new users (up to 4,000 API calls during the first 12 months), its pay-as-you-go pricing across compute, storage, and inference services enables businesses to scale based on real-time needs.

| Pricing Model | Description | Benefit for Users |

|---|---|---|

| Free Tier | 4,000 API calls and storage for 12 months | Low-risk experimentation for new users |

| On-Demand Pricing | Per-second billing based on usage | Flexible budgeting and resource allocation |

| Multi-Model Hosting | Shared infrastructure for multiple models | Reduces deployment cost for large model sets |

| Reserved Instances | Prepaid capacity for predictable workloads | Cost savings for long-term projects |
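
Two of these pricing models lend themselves to a quick calculation: per-second billing and multi-model hosting. The sketch below is illustrative only. The hourly rates, model counts, and the number of models that fit on one instance are hypothetical values, not AWS prices or limits.

```python
import math


def per_second_cost(hourly_rate: float, seconds: int) -> float:
    """Cost of a job billed per second at the given hourly rate."""
    return hourly_rate / 3600 * seconds


def instances_needed(num_models: int, models_per_instance: int) -> int:
    """Instances required when each instance can host several models."""
    return math.ceil(num_models / models_per_instance)


def hosting_cost(num_models: int, hourly_rate: float, hours: float,
                 models_per_instance: int = 1) -> float:
    """Total hosting cost; models_per_instance=1 means one endpoint per model."""
    return instances_needed(num_models, models_per_instance) * hourly_rate * hours


if __name__ == "__main__":
    # Hypothetical: a 1,000-second training job on a USD 3.60/hour instance.
    print(f"Training job: USD {per_second_cost(3.60, 1000):.2f}")
    # Hypothetical: 20 models on USD 1.00/hour instances for a 720-hour month.
    print(f"Dedicated endpoints: USD {hosting_cost(20, 1.0, 720):,.0f}")
    print(f"Shared, 8 per instance: USD {hosting_cost(20, 1.0, 720, 8):,.0f}")
```

In this made-up scenario, packing eight models onto each instance cuts the fleet from 20 instances to 3. The real saving depends on model size, memory limits, and how evenly traffic is spread across models.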

Platform Comparison: SageMaker vs Other Deep Learning Platforms (2026)

| Key Features | Amazon SageMaker | Google Vertex AI | PyTorch | Hugging Face | Databricks Mosaic AI |

|---|---|---|---|---|---|

| Cloud-Native ML Stack | Yes (AWS-native) | Yes (GCP-native) | No | No | Yes (Spark-native) |

| Managed Model Deployment | Yes (multi-model endpoints) | Yes | Partial | Yes (Inference Endpoints) | Partial |

| MLOps Pipeline Support | Native with Pipelines | Native (Vertex AI Pipelines) | Requires 3rd-party | No | Native workflows |

| IDE and No-Code Tools | Studio + Canvas | Vertex AI Workbench | Jupyter (external) | Not provided | Notebooks only |

| Integration with Cloud Services | Deep AWS integration | Deep GCP integration | Requires setup | No integration | Runs on AWS, Azure, and GCP |

| Beginner Usability | Moderate | High | Low to Medium | High | Moderate |

| Support and Documentation | Highly rated | Highly rated | Community-driven | Community-driven | High enterprise support |

User Feedback from Real-World Deployments

Professionals across industries report that Amazon SageMaker performs well in managing the full AI lifecycle. One lead AI engineer in the e-commerce industry praised the platform for its responsive support, deep integration with AWS services, and detailed documentation. According to user reviews on Gartner Peer Insights and G2, SageMaker ranks high for reliability, deployment speed, and support quality.

Many teams appreciate the ability to deploy multi-model endpoints, which can significantly reduce infrastructure costs and streamline scaling. This flexibility allows enterprises to serve many models behind a single endpoint instead of provisioning one endpoint per model.

However, several users noted that SageMaker’s interface may feel complex for newcomers. While Studio offers powerful capabilities, mastering its full feature set takes time. Some users also pointed out that calculating the total cost of ownership can be difficult due to the platform’s extensive configuration and pricing options.

Strengths and Weaknesses of Amazon SageMaker in 2026

| Category | Strengths | Weaknesses |

|---|---|---|

| Workflow Automation | Seamless end-to-end ML lifecycle management | Steeper learning curve for new users |

| Cloud Compatibility | Deep integration with AWS ecosystem | Less ideal for teams on non-AWS cloud infrastructure |

| Deployment Speed | Real-time and multi-model endpoints simplify rollout | Requires configuration expertise for advanced options |

| User Support | Rated highly for service and global documentation | Interface not as intuitive as Vertex AI or Hugging Face |

| Cost Flexibility | Free tier, reserved pricing, and dynamic scaling options | Harder to forecast total cost for sporadic workloads |

Conclusion

In 2026, Amazon SageMaker continues to lead the market for managed deep learning services, empowering enterprises with a complete AI development and deployment platform. Its full-stack integration with AWS services, combined with advanced automation, support for MLOps, and scalable hosting options, makes it ideal for teams looking to move fast while staying in control of cost and performance.

As one of the top 10 deep learning software platforms in the world, Amazon SageMaker stands out for its reliability, flexibility, and enterprise-readiness—helping companies of all sizes turn their machine learning projects into production-ready AI applications.

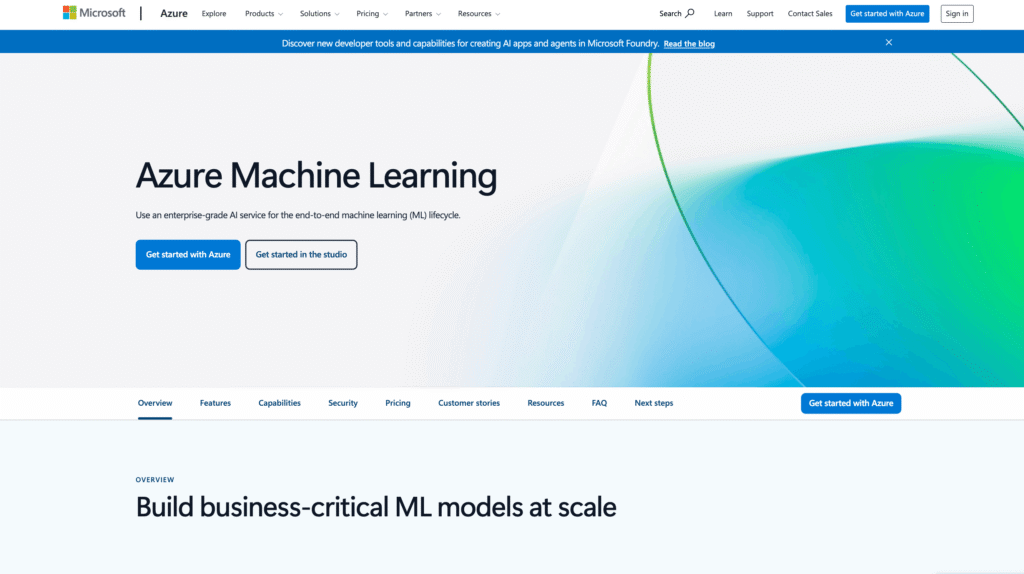

10. Microsoft Azure Machine Learning

Microsoft Azure Machine Learning (Azure ML) has grown into one of the most secure, scalable, and enterprise-ready deep learning platforms in 2026. With robust integration across Microsoft’s wider ecosystem, including Azure Cloud, Microsoft 365, Teams, Power BI, and Microsoft Entra ID (formerly Azure Active Directory), Azure ML lets organizations manage the entire AI development lifecycle from a single, trusted environment.

As one of the top 10 deep learning software platforms globally, Azure ML is widely adopted by large enterprises, especially in regulated industries such as banking, insurance, healthcare, and government. Its security features, flexibility across virtual machine (VM) types, and support for high-performance AI training make it a reliable platform for both experimental and mission-critical applications.

Comprehensive AI Lifecycle Management in a Secure Environment

Azure ML provides an end-to-end framework that covers every stage of AI development—from data ingestion and preprocessing to training, tuning, deploying, and monitoring machine learning models. The platform supports a wide variety of development environments, including low-code/no-code experiences, Jupyter Notebooks, CLI, SDKs, and drag-and-drop ML pipelines.

Key benefits include:

- Deep integration with Azure services such as Blob Storage, Azure DevOps, Kubernetes, and Synapse Analytics

- Pre-built ML pipelines for classification, forecasting, anomaly detection, and image processing

- Flexible training options, including AutoML, custom containers, and distributed learning

- Enterprise governance tools, such as version-controlled model registries, endpoint monitoring, and access management via Microsoft Entra ID (formerly Azure Active Directory)

Azure VM Pricing Structure for AI Workloads (2026)

Azure’s pricing for AI workloads is based on the type of virtual machine (VM) used. Each SKU category is optimized for a specific use case, and customers can choose between on-demand pricing and Reserved Instances to manage costs.

| VM SKU Category | Starting Hourly Price (USD) | Best Use Case |
|---|---|---|
| General Purpose (B-series) | 0.0198 | Development and testing environments |
| Compute-Optimized | 0.0846 | Large-scale batch processing |
| Memory-Optimized | 0.126 | In-memory analytics and processing |
| GPU-Enabled | 0.90 | Deep learning and AI model training |
| Storage-Optimized | 0.624 | Data warehousing and large datasets |
| High-Performance (HPC) | 0.796 | Scientific computing and simulations |

Organizations that commit to Reserved Instances over three years can receive up to 62% cost savings, making Azure ML a cost-effective choice for long-term projects.
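To make the pricing arithmetic concrete, the following sketch estimates monthly spend per SKU category from the starting hourly prices listed above and applies the maximum 62% Reserved Instance discount. It is an illustration only: the 730-hours-per-month figure, the `monthly_cost` helper, and the idea that the full 62% applies uniformly to every SKU are assumptions made for the example, not Azure’s published billing logic. Real bills also depend on region, storage, and networking.

```python
# Rough monthly cost estimate from the starting hourly prices in the table.
# Assumption (not from the article): a month is billed as 730 hours.
HOURS_PER_MONTH = 730

# Starting hourly prices in USD, copied from the pricing table.
STARTING_HOURLY_USD = {
    "General Purpose (B-series)": 0.0198,
    "Compute-Optimized": 0.0846,
    "Memory-Optimized": 0.126,
    "GPU-Enabled": 0.90,
    "Storage-Optimized": 0.624,
    "High-Performance (HPC)": 0.796,
}

# "Up to 62%" for a three-year commitment; treated here as a best case.
MAX_RESERVED_DISCOUNT = 0.62


def monthly_cost(hourly_usd: float,
                 hours: float = HOURS_PER_MONTH,
                 discount: float = 0.0) -> float:
    """Return the estimated cost in USD for `hours` of usage.

    `discount` is a fraction between 0 and 1 (0.62 means 62% off).
    """
    if not 0.0 <= discount <= 1.0:
        raise ValueError("discount must be between 0 and 1")
    return hourly_usd * hours * (1.0 - discount)


if __name__ == "__main__":
    for sku, price in STARTING_HOURLY_USD.items():
        on_demand = monthly_cost(price)
        reserved = monthly_cost(price, discount=MAX_RESERVED_DISCOUNT)
        print(f"{sku:<28} on-demand ${on_demand:>8.2f}   "
              f"reserved (best case) ${reserved:>8.2f}")
```

Because the table lists starting prices only, the output is best read as a lower bound for each category rather than a quote.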

Support Tiers for Enterprise Needs

Microsoft offers multiple support plans to meet diverse customer needs, ranging from free tier access to premium enterprise-level support:

| Support Plan | Features Included |
|---|---|
| Basic (Free Tier) | Access to documentation, community forums |
| Developer Support | Technical support during business hours |
| Standard Support | 24/7 support with 1-hour response for critical cases |
| Professional Direct | Faster response times and architecture guidance |
| Unified Enterprise | 24/7 critical support with 15-minute response and a dedicated TAM |

Core Capabilities of Azure Machine Learning in 2026

| Functional Area | Description | Business Impact |
|---|---|---|
| Full Lifecycle Coverage | Supports data ingestion, model training, deployment, and monitoring | Reduces need for external tools and integrations |
| Enterprise Integration | Connects with Microsoft Teams, 365, Power BI, Synapse | Aligns AI with business workflows |
| Security and Compliance | Role-based access control, encryption, auditing | Enables safe AI usage in regulated industries |
| High-Performance Computing | Support for GPUs, distributed learning, and auto-scaling | Accelerates complex training tasks |
| Flexible Development | Code-first and no-code environments for all skill levels | Empowers both data scientists and business users |
| Model Monitoring | Real-time metrics, drift detection, logging | Ensures reliable model performance in production |

Comparison Matrix: Azure ML vs Leading Deep Learning Platforms (2026)

| Platform Feature | Azure Machine Learning | Google Vertex AI | Amazon SageMaker | Databricks Mosaic AI | NVIDIA AI Enterprise |
|---|---|---|---|---|---|
| Cloud Ecosystem Integration | Deep (Azure-native) | Deep (GCP-native) | Deep (AWS-native) | Native Spark on Azure | Tied to NVIDIA GPUs |
| HPC & GPU Support | Yes (VMs, H100, A100) | Yes | Yes | Limited | Yes |
| Cost Management Options | Reserved Instances | Usage-based | Free + Tiered | Subscription-based | Bundled with hardware |
| Governance and Compliance | Strong (AD, logging) | Moderate | Moderate | Strong | Strong |
| Enterprise App Integration | 365, Teams, Power BI | BigQuery | S3, Lambda | SQL, Spark | Partial |
| Deployment Flexibility | Hybrid, Cloud, Edge | Cloud only | Cloud & On-Prem | Cloud & Hybrid | On-Prem & Cloud |

Enterprise Feedback and Real-World Applications

Professionals in the banking, healthcare, and manufacturing industries consistently highlight Azure ML’s strengths in security, scalability, and data governance. One review, from a data & analytics manager in the banking sector, described the platform’s role in fraud detection: models automatically flag suspicious claims, freeing analysts to focus on high-risk cases.

Another key benefit reported by users is the platform’s seamless integration with Microsoft’s productivity suite. Teams can easily trigger AI workflows from within familiar applications like Excel or Teams, which streamlines adoption across departments.

However, some reviewers note a steep learning curve, especially for teams unfamiliar with Azure’s ecosystem. Users also mention that frequent changes to Azure’s product naming and user interface can cause confusion, particularly in long-running deployments.

Advantages and Challenges of Azure ML in 2026

| Category | Key Advantages | Potential Challenges |
|---|---|---|
| Enterprise Security | Strong compliance, encryption, and user management | May be excessive for small-scale or personal projects |
| Flexibility | Extensive VM options, from dev to HPC environments | Complex setup for new users unfamiliar with Azure |
| Cost Optimization | Long-term pricing discounts via Reserved Instances | Harder to estimate total cost without careful planning |
| Support Quality | Fast response with Unified Enterprise tier | Premium support tiers may be costly for smaller businesses |
| Workflow Efficiency | Full ML lifecycle in one platform | UI changes may disrupt long-term project continuity |

Conclusion

In 2026, Microsoft Azure Machine Learning continues to be a trusted platform for enterprises looking to build, deploy, and manage AI solutions with full security, compliance, and governance. It is especially valued in regulated sectors that demand robust infrastructure and end-to-end visibility into the machine learning lifecycle.

As one of the top 10 deep learning software platforms in the world, Azure ML delivers a powerful combination of flexibility, security, and integration—making it an ideal choice for large organizations pursuing scalable and responsible AI transformation.

Deep Learning Market Outlook in 2026: Growth, Regional Dynamics, and Sector Trends

The global deep learning industry in 2026 is experiencing extraordinary expansion, supported by significant investments, technological advances, and increasing enterprise adoption across critical sectors. This expansion is reshaping both regional dominance and vertical distribution, with North America leading in total market value and the Asia-Pacific region emerging as the fastest-growing geographic zone.

Backed by consistent growth indicators and new use cases, the economic landscape of deep learning is projected to evolve rapidly between 2026 and 2034. This overview highlights macroeconomic trends, regional developments, component-level breakdowns, and high-value industry applications—all essential for understanding the current and future state of the global deep learning software ecosystem.

Global Market Size and Projected Growth

The overall deep learning market is growing at a Compound Annual Growth Rate (CAGR) estimated at between 26.2% and 32.7%, with some individual segments growing faster: the Asia-Pacific region is forecast at 37.2% and the broader machine learning market at 34.8%. This growth is closely linked to broader advances in artificial intelligence, particularly machine learning and foundation models.

| Market Segment | Base Value (Year) | Projected Value (Year) | CAGR (%) |
|---|---|---|---|
| Global Deep Learning Market | USD 25.5 Billion (2024) | USD 261.3 Billion (2034) | 26.2% |
| Global Machine Learning Market | USD 113.10 Billion (2025) | USD 503.40 Billion (2030) | 34.8% |
| North America Market Share | 33.9% (2025 est.) | Approaching 40% (2030) | N/A |
| Asia-Pacific Growth Rate | N/A | N/A | 37.2% |
| Software Component Share | 46.1% – 46.6% (2025) | N/A | N/A |

The total valuation of the global deep learning software and infrastructure market is expected to surpass USD 261 billion by 2034. This roughly tenfold increase from the 2024 base of USD 25.5 billion highlights industries’ growing dependence on intelligent systems, including neural network-based decision engines, autonomous agents, and multi-modal AI platforms.
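The growth figures in the table can be cross-checked with the standard CAGR formula, CAGR = (end / start)^(1 / years) - 1. The short sketch below recomputes the two rates from the table’s start and end values. The function names are illustrative, and the year spans (10 years for 2024 to 2034, 5 years for 2025 to 2030) are read off the table rather than stated by the original forecasts.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate as a fraction (0.25 means 25%)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: float) -> float:
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1.0 + rate) ** years


if __name__ == "__main__":
    # Global deep learning market: USD 25.5B (2024) -> USD 261.3B (2034).
    dl_rate = cagr(25.5, 261.3, years=10)
    # Global machine learning market: USD 113.10B (2025) -> USD 503.40B (2030).
    ml_rate = cagr(113.10, 503.40, years=5)

    print(f"Deep learning CAGR:    {dl_rate:.1%}")
    print(f"Machine learning CAGR: {ml_rate:.1%}")
    print(f"2034 / 2024 multiple:  {261.3 / 25.5:.2f}x")
```

Both recomputed rates land within a tenth of a percentage point of the table’s 26.2% and 34.8%, and the 2034 figure comes out at a little over ten times the 2024 base.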

Regional Dynamics: North America and Asia-Pacific

North America continues to dominate the deep learning market in terms of revenue and infrastructure maturity. By early 2025, it held 33.9% of global market share, and projections indicate it may reach close to 40% by 2030. This growth is driven by strong adoption across U.S. enterprises, advanced research ecosystems, and leading cloud providers such as AWS, Google Cloud, and Microsoft Azure.

Meanwhile, the Asia-Pacific region is witnessing accelerated expansion, primarily fueled by large-scale investments in AI infrastructure from China, India, and the United Arab Emirates. A CAGR of 37.2% positions this region as the fastest-growing AI market globally. Government-backed AI missions, 5G rollouts, and national compute platforms contribute significantly to this momentum.

| Region | Current Market Share | 2030+ Growth Potential | Key Drivers |
|---|---|---|---|
| North America | 33.9% (2025 est.) | ~40% by 2030 | Enterprise AI, cloud maturity, regulatory clarity |
| Asia-Pacific | Fast-growing | 37.2% CAGR through 2030 | Public/private funding, digital adoption, AI labs |
| Europe | Moderate | Slower relative growth | GDPR compliance, AI Act, academic research |
| Middle East & Africa | Emerging | High-growth potential | Smart city projects, sovereign AI initiatives |

Software as a Core Market Component

Within the deep learning industry, software remains the primary revenue generator, accounting for between 46.1% and 46.6% of total component-level market share. This includes frameworks, platforms, APIs, model hubs, orchestration tools, and proprietary inference engines.

As deep learning models become more modular and cloud-native, the value of flexible, interoperable software platforms continues to rise. Technologies such as AutoML, edge AI deployment tools, multi-agent orchestration layers, and model monitoring systems are central to enterprise strategies in 2026.

Application Distribution by Sector

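Revenue distribution across application verticals in 2026 remains concentrated in industries with high complexity and data sensitivity. Image recognition leads the way, especially within healthcare diagnostics, industrial quality control, and automotive automation. Note that the shares in the table below add up to well over 100%, which suggests they come from overlapping segmentations (by application type and by end-use industry); they are best read as independent estimates rather than slices of a single total.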

| Industry Application Area | Share of Application-Based Revenue (2026) | Description |
|---|---|---|
| Image Recognition | 43.2% | Used in radiology, manufacturing QA, surveillance, autonomous vehicles |
| Automotive (ADAS & AV) | 39.6% | Deep neural networks for self-driving systems and advanced driver assistance |
| Healthcare AI | ~28% (estimated) | Predictive diagnostics, personalized medicine, workflow automation |
| Financial Services | ~19% (estimated) | Fraud detection, credit scoring, algorithmic trading |
| Retail and E-commerce | ~16% (estimated) | Demand forecasting, recommendation engines, visual search |

The automotive industry, in particular, has emerged as one of the largest beneficiaries of deep learning. Neural networks are fundamental to enabling autonomous vehicle navigation, sensor fusion, real-time decision-making, and Advanced Driver-Assistance Systems (ADAS).

Key Takeaways on Deep Learning Software Market in 2026

| Insight Area | Market Status (2026) | Strategic Implication |
|---|---|---|
| Global Market Growth | CAGR of 26.2% to 32.7% over the forecast period | Significant investment opportunities in AI platforms |
| North America Dominance | 33.9% share, approaching 40% by 2030 | U.S. continues to lead in adoption and infrastructure maturity |
| Asia-Pacific Acceleration | 37.2% CAGR | Key expansion area for AI vendors and investors |
| Software as Growth Driver | 46.1%–46.6% of total revenue | Indicates rising demand for modular, cloud-based AI solutions |
| Application Concentration | Image recognition and automotive lead | Reflects focus on safety-critical and high-ROI AI use cases |

Conclusion

In 2026, the global deep learning software ecosystem is entering a phase of rapid scale and strategic significance. North America retains financial and infrastructure leadership, while the Asia-Pacific region is setting the pace for adoption and innovation. Software remains the dominant component, powering a range of use cases across autonomous vehicles, healthcare diagnostics, and real-time analytics.

With major players investing in AI compute infrastructure, cross-platform interoperability, and responsible AI practices, the global market for deep learning is set to redefine industries throughout the decade ahead. The tools and platforms leading this transformation—like PyTorch, TensorFlow, Hugging Face, and Azure ML—are at the center of this growth story.

Performance Benchmarking of Deep Learning Software in 2026: Speed, Efficiency, and Model Serving Capabilities